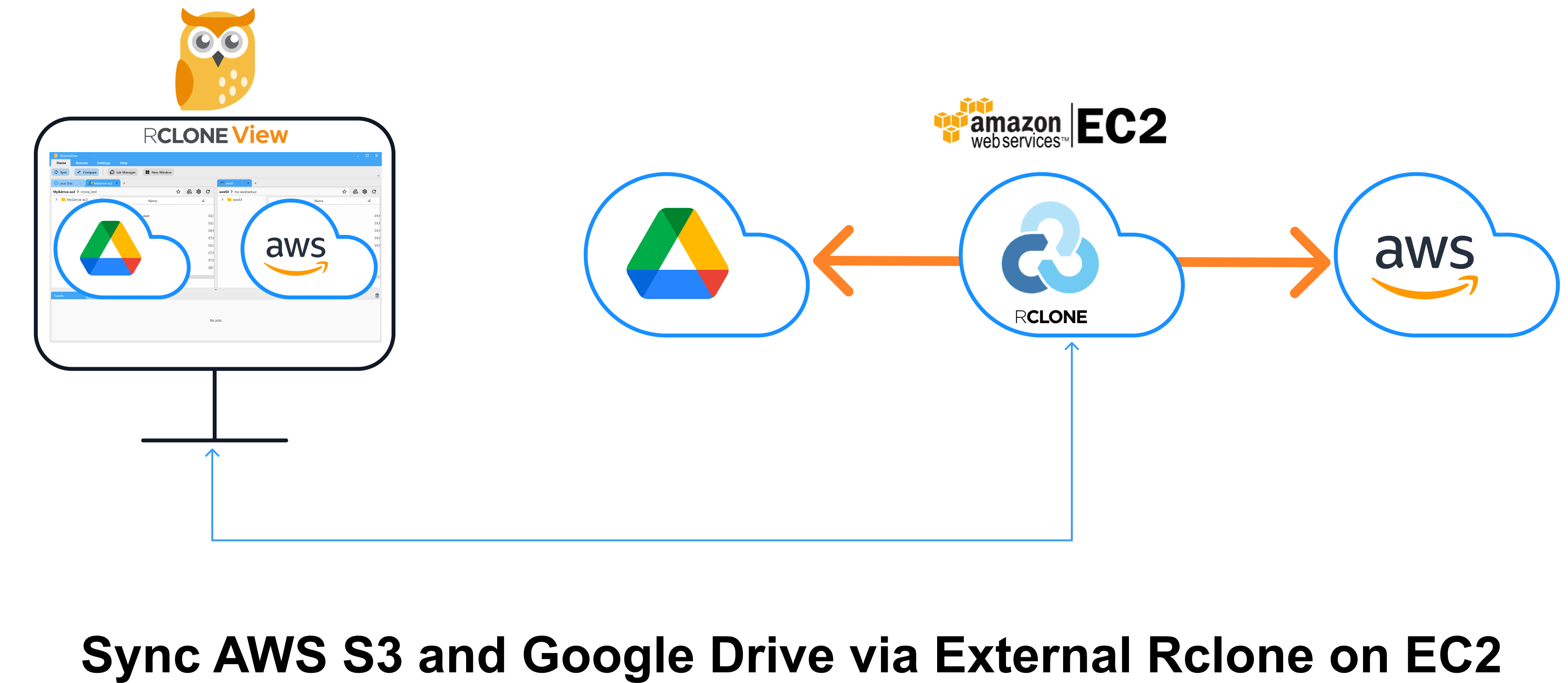

Sync AWS S3 and Google Drive via External Rclone on EC2

Synchronizing data between cloud storage services (e.g., Google Drive ↔ AWS S3) using RcloneView is simple thanks to its built-in Embedded Rclone. When you install RcloneView, this embedded engine is automatically included and is typically used to manage file transfers from your local PC.

However, using the Embedded Rclone means that all transfer traffic flows through your local computer. This can slow things down significantly—especially when syncing large datasets or operating over a slower network.

For example, syncing files from Google Drive to AWS S3 using Embedded Rclone involves downloading files to your local machine and then uploading them to S3. This double-transfer not only adds latency but also consumes your local bandwidth.

A better solution in this case is to run Rclone directly on a cloud instance—like an AWS EC2 server. By connecting RcloneView to that External Rclone running on EC2, you can:

- Avoid routing traffic through your local PC

- Perform cloud-to-cloud transfers directly within the cloud (Google → EC2 → S3)

- Take advantage of higher-speed cloud network infrastructure

This architecture significantly improves performance and reduces the load on your local device.
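To make the difference concrete, here is a rough back-of-the-envelope sketch. The dataset size and link speeds are hypothetical, and `transfer_seconds` is an illustrative helper, not part of Rclone or RcloneView. It ignores protocol overhead and provider rate limits, so treat the results as an order-of-magnitude estimate only.

```shell
# Hypothetical helper: seconds needed to move SIZE_GB gigabytes over a
# link of RATE_MBPS megabits per second (1 GB = 8000 megabits, decimal).
transfer_seconds() {
  awk -v gb="$1" -v mbps="$2" 'BEGIN { printf "%d\n", gb * 8000 / mbps }'
}

# Assumed figures: 100 GB dataset, 20 Mbps home uplink, 1000 Mbps EC2 link.
echo "via local PC: $(transfer_seconds 100 20) s"
echo "via EC2:      $(transfer_seconds 100 1000) s"
```

With these assumed numbers, the upload leg alone takes roughly 11 hours through the local PC, compared with about 13 minutes over the cloud link.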

In this tutorial, we’ll walk you through how to use RcloneView’s New Window feature to connect to an External Rclone on EC2, then sync files between Google Drive and AWS S3 entirely from the cloud.

Running Rclone on an EC2 instance can lead to faster transfers, but note that AWS may charge for compute usage and outbound data transfer to other services.

This guide walks you through how to:

- Launch and configure Rclone on an AWS EC2 instance

- Open a new RcloneView window

- Connect to the External Rclone on EC2

- Add Google Drive and AWS S3 remotes

- Synchronize files directly between them with improved performance

Part 1: Deploy Rclone on EC2 (External Rclone)

Follow the AWS EC2 guide to launch Ubuntu, open port 5572, install Rclone, and run the rcd daemon.

👉 See: How to Run Rclone on AWS EC2 Server

Key points:

- rclone rcd is running with --rc-addr=0.0.0.0:5572

- Port 5572 is open in your EC2 Security Group

- HTTP Basic auth is set (--rc-user, --rc-pass)

- Run the daemon with:

rclone rcd --rc-user=admin --rc-pass=admin --rc-addr=0.0.0.0:5572

- Verify access with:

curl -X POST -u admin:admin "http://<EC2-PUBLIC-IP-or-DNS>:5572/rc/noop"
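Because the daemon listens on 0.0.0.0, it may be worth limiting who can reach port 5572. The following is a minimal sketch, assuming the AWS CLI is installed and configured; `to_cidr` and `allow_rc_port` are hypothetical helper names, and the Security Group ID is a placeholder you would replace with your own.

```shell
# Hypothetical helper: turn a single IPv4 address into a /32 CIDR block.
to_cidr() {
  printf '%s/32\n' "$1"
}

# Hypothetical helper: allow inbound TCP 5572 from one address only.
# $1 is your Security Group ID (placeholder), $2 is the address to allow.
allow_rc_port() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" --protocol tcp --port 5572 --cidr "$(to_cidr "$2")"
}

# Example (not run here):
#   my_ip=$(curl -s https://checkip.amazonaws.com)
#   allow_rc_port <SECURITY-GROUP-ID> "$my_ip"
```

Restricting the rule to a single address means the curl check above only succeeds from that machine, which is usually the PC running RcloneView.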

Part 2: Open a New RcloneView Window

To keep connections organized, RcloneView allows each window to operate with its own Rclone engine.

- Launch RcloneView.

- Click the New Window button from the Home menu.

- A new application window will open. This instance is independent of the main window and will maintain its own connection context.

📌 You’ll connect this window to your External Rclone (EC2) in the next step.

👉 Learn more: Using Multiple Rclone Connections with New Window

Part 3: Connect EC2-hosted External Rclone

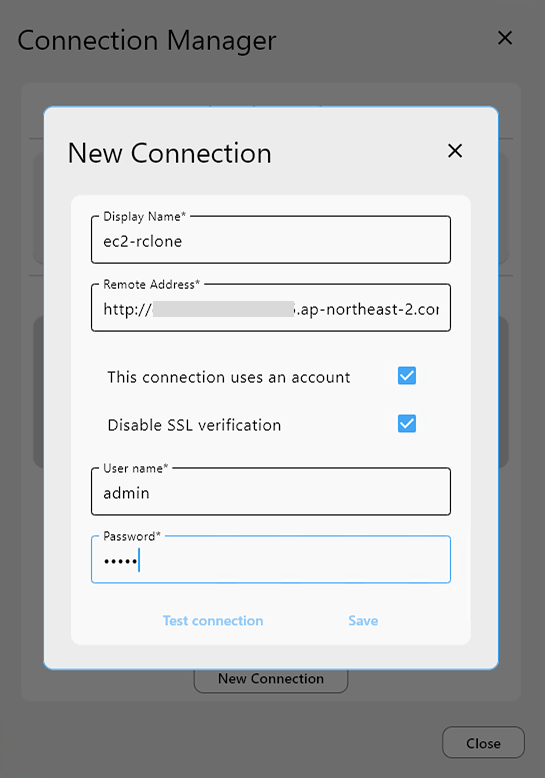

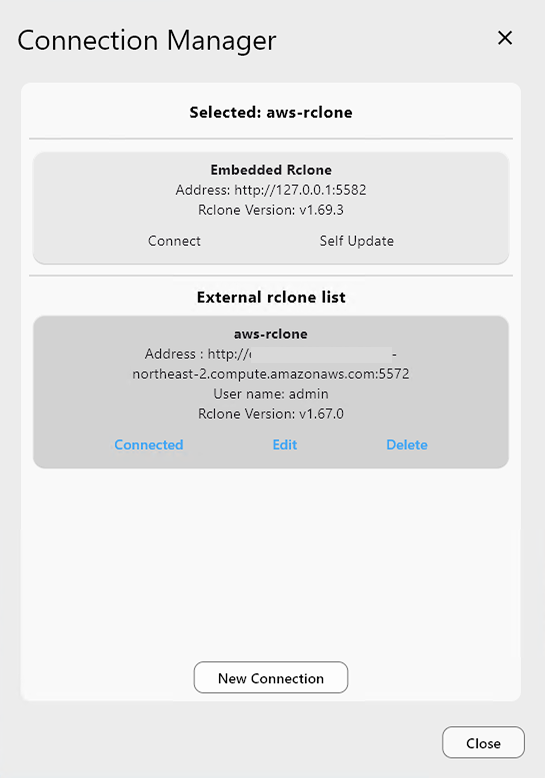

In the newly opened window, follow these steps to connect to your EC2-hosted External Rclone:

- Open Connection Manager from the Settings menu.

- Click New Connection to create a new Rclone connection profile.

- Fill in the required fields:

  - Display Name: ec2-rclone (or any name you prefer)

  - Remote Address: http://<EC2-PUBLIC-IP-or-DNS-NAME>:5572

  - Username / Password: Use the values you set in Part 1 when starting the Rclone daemon (e.g., --rc-user=admin, --rc-pass=admin)

- Click Test Connection to verify the setup. You should see a successful connection response.

- If the test passes, click Save, then Connect.

You are now connected to your External Rclone instance running on EC2, and the connection status should appear at the top of the window.

👉 Learn more: Add a New External Rclone
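If the connection test fails, it can help to query the daemon from a terminal. The sketch below assumes a standalone rclone binary is installed on your PC (separate from RcloneView's embedded one); `ec2_rc` and the `RCLONE_BIN` override are hypothetical names, and the credentials are the example values from Part 1.

```shell
# Connection details from Part 1 (placeholders; replace before use).
EC2_RC_URL="http://<EC2-PUBLIC-IP-or-DNS-NAME>:5572"
EC2_RC_USER="admin"
EC2_RC_PASS="admin"

# Hypothetical wrapper: run an rc method against the EC2-hosted daemon.
# RCLONE_BIN is an assumed override for the local rclone binary.
ec2_rc() {
  "${RCLONE_BIN:-rclone}" rc --url "$EC2_RC_URL" \
    --user "$EC2_RC_USER" --pass "$EC2_RC_PASS" "$@"
}

# Example (not run here): print the version reported by the remote daemon.
#   ec2_rc core/version
```

A JSON response with version details indicates that the address, port, and credentials are all correct, so any remaining problem is likely in the connection profile itself.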

Part 4: Add AWS S3 & Google Drive Remotes (via External Rclone)

Still in the EC2-connected RcloneView window:

➕ Add AWS S3 Remote

- Click + New Remote in the Remote menu

- In the Provider tab, search for and select S3

- In the Options tab:

  - Set Provider to AWS

  - Enter your AWS Access Key ID and Secret Access Key

  - Set Region and optionally set Endpoint for S3-compatible services

- Click Save, name it (e.g., ec2-s3)

👉 Learn more: Add AWS S3 Remote
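The same remote can, in principle, be created without the GUI through Rclone's remote-control method config/create. This is a sketch under stated assumptions: `s3_remote_json` is a hypothetical helper, the region is an example value, and the credentials are assumed to contain no double quotes or backslashes.

```shell
# Hypothetical helper: build the JSON body for the rc method config/create.
# Arguments: remote name, access key ID, secret access key, region.
# Assumes none of the values contain double quotes or backslashes.
s3_remote_json() {
  printf '{"name":"%s","type":"s3","parameters":{"provider":"AWS","access_key_id":"%s","secret_access_key":"%s","region":"%s"}}\n' \
    "$1" "$2" "$3" "$4"
}

# Example (not run here), with credentials taken from the environment:
#   rclone rc --url "http://<EC2-PUBLIC-IP-or-DNS>:5572" --user admin --pass admin \
#     --json "$(s3_remote_json ec2-s3 "$AWS_ACCESS_KEY_ID" "$AWS_SECRET_ACCESS_KEY" us-east-1)" \
#     config/create
```

Reading the keys from environment variables keeps them out of your shell history, though they are still sent to the daemon over whatever transport the Remote Address uses.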

➕ Add Google Drive Remote (Using OAuth Access Token)

Instead of completing a new browser-based login flow, follow the guide below to reuse an OAuth access token you have already obtained:

👉 See: Set Up Google Drive on External Rclone Without Browser

- Go to + New Remote from the Remote menu

- Select Google Drive as the provider

- In the Options tab, scroll down and click Show advanced options

- Paste the previously copied token into the token field

- Name the remote (e.g., ec2-gdrive) and save

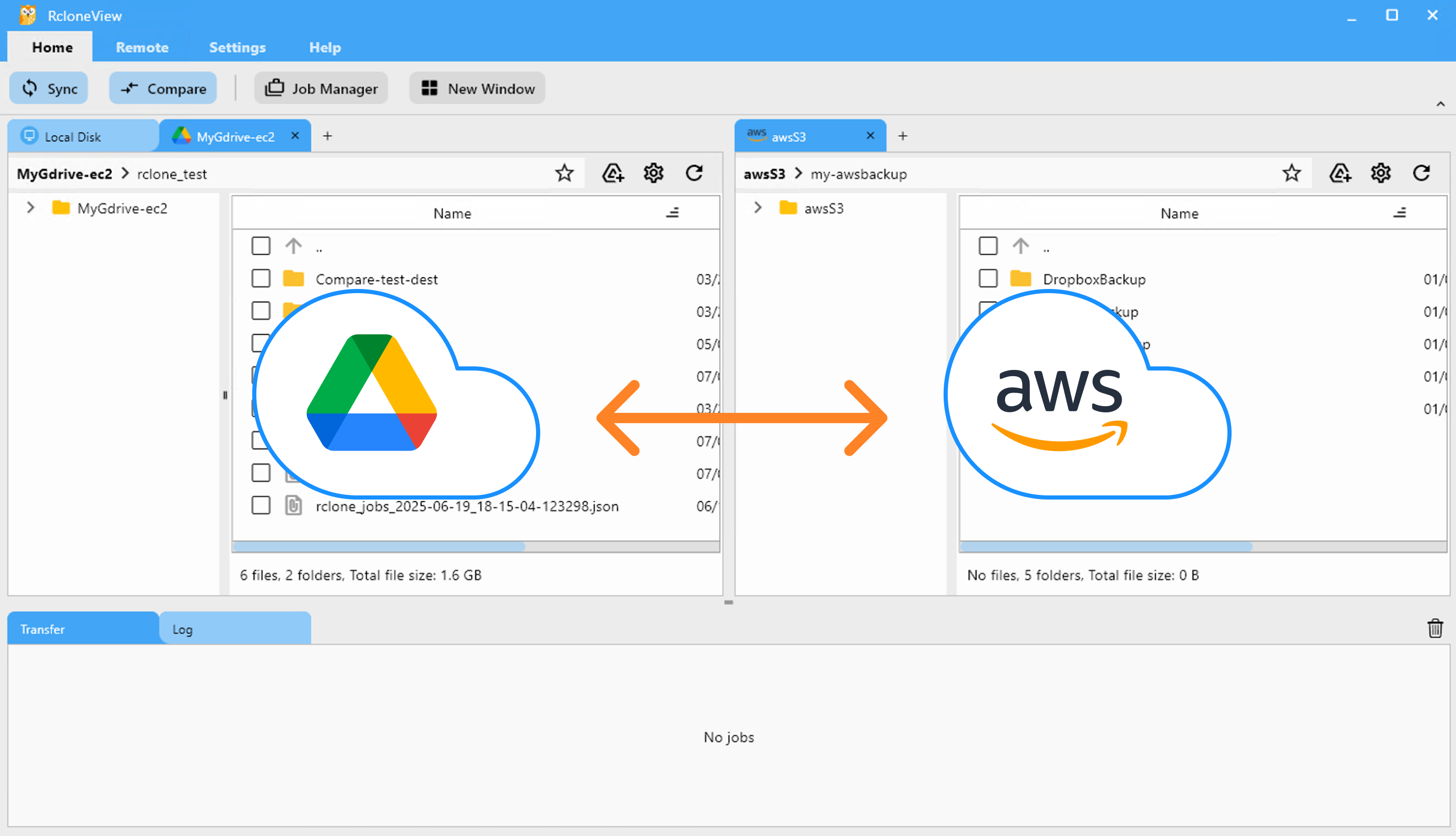
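Once both remotes are saved, one way to confirm that they live on the EC2 daemon (and not in your local configuration) is to ask it for its remote list through the rc method config/listremotes. The helper name `has_remote` below is hypothetical, and the credentials are the example values from Part 1.

```shell
# Hypothetical helper: succeed if a config/listremotes JSON response
# (first argument) contains the quoted remote name (second argument).
has_remote() {
  printf '%s' "$1" | grep -qF "\"$2\""
}

# Example (not run here):
#   remotes=$(curl -s -X POST -u admin:admin \
#     "http://<EC2-PUBLIC-IP-or-DNS>:5572/config/listremotes")
#   has_remote "$remotes" ec2-gdrive && has_remote "$remotes" ec2-s3 \
#     && echo "both remotes present"
```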

Part 5: Sync Files Between AWS S3 and Google Drive

You can now transfer files between Google Drive and S3 via your EC2 Rclone instance.

📁 Method A: Compare and Sync On Demand

- Go to the Browse tab

- Load Google Drive remote in the left pane (ec2-gdrive:)

- Load AWS S3 remote in the right pane (ec2-s3:)

- Click Compare in the top menu

- Review differences:

  - Files on one side only

  - Different sizes

  - Identical matches

- Use Copy →, ← Copy, or Delete to sync

💡 Progress is shown in the status bar and transfer log tab.

👉 Learn more: Compare Folder Contents
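For a command-line view of the same comparison, Rclone's check command can emit a combined report in which each line starts with a status symbol. The sketch below assumes you run it on the EC2 host (for example over SSH) and that the CLI there reads the same configuration file as the rcd daemon; `summarize_check` is a hypothetical helper, and the folder paths are the example names used in this guide.

```shell
# Hypothetical helper: summarize `rclone check --combined -` output read
# from stdin. The first character of each line is the status symbol:
#   "=" identical, "+" only in source, "-" only in destination,
#   "*" present on both sides but different, "!" error.
summarize_check() {
  awk '
    { sym = substr($0, 1, 1) }
    sym == "=" { same++ }
    sym == "+" { src_only++ }
    sym == "-" { dst_only++ }
    sym == "*" { differ++ }
    sym == "!" { errors++ }
    END {
      printf "identical=%d source_only=%d dest_only=%d different=%d errors=%d\n",
        same, src_only, dst_only, differ, errors
    }
  '
}

# Example (not run here), on the EC2 host:
#   rclone check ec2-gdrive:folder ec2-s3:folder --size-only --combined - \
#     | summarize_check
```

The counts map onto the categories shown by Compare: files on one side only, files that differ, and identical matches.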

🕒 Method B: Set Up a Scheduled Sync Job

- Go to Home → Job Manager, then click Add Job

- Select Sync

  - Source: ec2-gdrive:folder

  - Destination: ec2-s3:folder

- Configure:

  - Sync direction

  - Filtering rules (optional)

- (Optional) Enable Scheduling

  - Set time pattern

  - Preview the schedule with the built-in simulator

- Click Save & Enable

Once scheduled, RcloneView will execute syncs automatically using your EC2-hosted Rclone backend.
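For reference, a one-off sync can also be started directly against the daemon with the rc method sync/sync. This is only a sketch of the underlying call, not a description of how RcloneView schedules jobs; `sync_json` is a hypothetical helper and the credentials are the example values from Part 1. Note that a sync makes the destination match the source, which includes deleting destination files that are absent from the source.

```shell
# Hypothetical helper: build the JSON body for the rc method sync/sync.
# "_async": true asks the daemon to run the sync as a background job
# and return a job ID immediately.
sync_json() {
  printf '{"srcFs":"%s","dstFs":"%s","_async":true}\n' "$1" "$2"
}

# Example (not run here):
#   rclone rc --url "http://<EC2-PUBLIC-IP-or-DNS>:5572" --user admin --pass admin \
#     --json "$(sync_json ec2-gdrive:folder ec2-s3:folder)" sync/sync
#
# The response contains a "jobid"; poll it with:
#   rclone rc --url "http://<EC2-PUBLIC-IP-or-DNS>:5572" --user admin --pass admin \
#     job/status jobid=<JOBID>
```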

Final Check

- Confirm that your sync completed successfully in the Transfer Log or Job History pane

- Run periodic checks on EC2 to confirm it remains connected and responsive
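A periodic check could be as simple as the following sketch, which reuses the rc/noop endpoint from Part 1. The function name `ec2_rcd_alive`, the `CURL_BIN` override, and the 10-second timeout are assumptions for illustration.

```shell
# Hypothetical health check: succeeds only if the rcd daemon answers
# rc/noop within 10 seconds. CURL_BIN is an assumed override for curl.
ec2_rcd_alive() {
  "${CURL_BIN:-curl}" -fsS --max-time 10 -X POST \
    -u "${EC2_RC_USER}:${EC2_RC_PASS}" "${EC2_RC_URL}/rc/noop" >/dev/null
}

# Example (not run here), e.g. from a cron job:
#   EC2_RC_URL="http://<EC2-PUBLIC-IP-or-DNS>:5572"
#   EC2_RC_USER=admin
#   EC2_RC_PASS=admin
#   ec2_rcd_alive || echo "External Rclone on EC2 is not responding" >&2
```

The `-f` flag makes curl return a non-zero exit code on HTTP errors such as a rejected login, so the check fails on bad credentials as well as on an unreachable host.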

🔗 Related Guides

- How to Run Rclone on AWS EC2 Server

- Using Multiple Rclone Connections with New Window

- Add a New External Rclone

- Add AWS S3 Remote

- Compare Folder Contents

- Synchronize Remote Storages

- Create Sync Jobs

- Execute & Manage Jobs

- Job Scheduling and Execution