Fix Google Drive Quota, Rate Limit, and API Errors with RcloneView

Tired of

userRateLimitExceeded,quotaExceeded, or random 429 responses? RcloneView gives Google Drive power users a GUI toolkit to predict, work around, and recover from API throttling without babysitting scripts.

Whether you are archiving media libraries, consolidating Shared Drives, or syncing MEGA into Google Workspace, everything eventually hits Drive’s limits:

- 750 GB/day upload & copy quota per user

- 5 TB maximum file size (non-Google Docs formats)

- Burst-limited API calls (

userRateLimitExceeded,rateLimitExceeded, 429) - Occasional backend hiccups (5xx, connection resets)

Instead of trial-and-error CLI runs, this guide shows how to keep jobs flowing through RcloneView’s Explorer, Scheduler, and diagnostics so every transfer resumes exactly where it left off.

Understand Drive errors before reacting

| Error text | Root cause | Fix in RcloneView |

|---|---|---|

userRateLimitExceeded, rateLimitExceeded | Too many requests per second from one user/project | Lower Checkers/Transfers, enable --tpslimit, stagger Scheduler windows |

quotaExceeded, 403: insufficient storage | Upload + copy bytes exceeded 750 GB/day OR destination Drive is full | Split jobs by folder, add pauses between batches, pick another account or wait for reset |

403: The user does not have sufficient permissions for file | Wrong Shared Drive or file ownership | Use Compare to inspect paths, verify Shared Drive membership |

5xx backendError / Internal Error | Google transient outage | Enable retries, exponential backoff, and let Scheduler resume |

drive: rateLimitExceeded: Too many requests for this file | Rapidly updating a single file | Enable chunked transfers, throttle concurrency |

RcloneView surfaces these messages in Job History and logs so you can pinpoint the exact timestamp and object that failed.

Step 1 — Baseline your Drive usage

- Check remaining quota: In Google Workspace Admin or Drive settings, confirm available storage for the target user or Shared Drive.

- Segment the data set: Use RcloneView’s Explorer to bucket the migration into logical folders (

Finance FY24,Video RAW, etc.). Drag-and-drop into staging folders to gauge size. - Run Compare: The Compare folders guide helps you preview deltas and avoid copying duplicates that chew through quota.

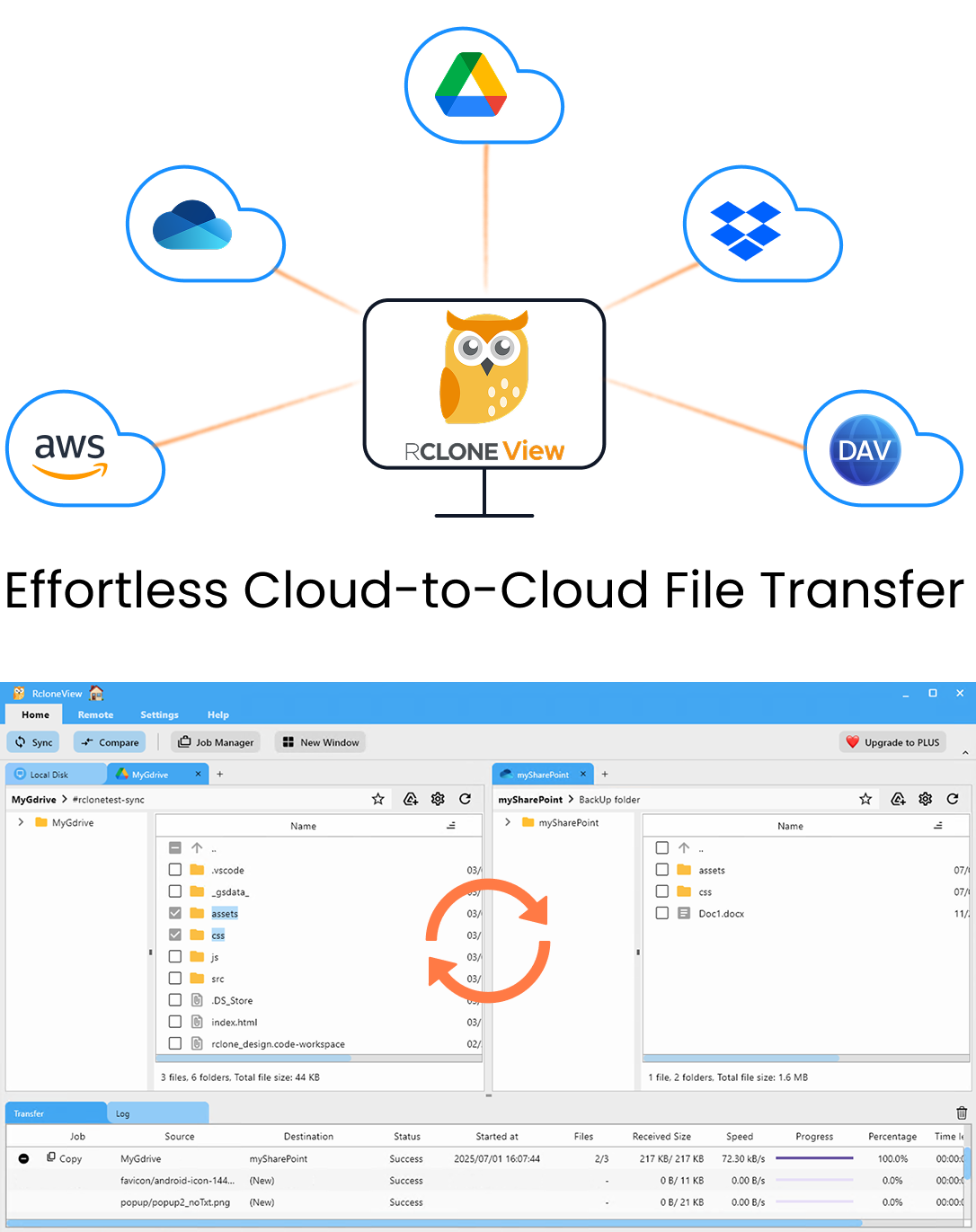

Supported Cloud Providers

Step 2 — Tune transfers before scheduling

Open Job Manager → Add Job (see Create Sync Jobs) and focus on these knobs:

- Transfers vs. Checkers: Set transfers to

4-8for 1 Gbps networks; drop to2when errors spike. Checkers at4keep verification healthy without spamming the API. - Chunk size: Leave defaults unless Google throttles uploads of huge videos; then drop chunk size to reduce burst load.

--drive-stop-on-upload-limit: Enable this flag (checkbox in Advanced options) so RcloneView pauses gracefully once 750 GB is consumed instead of throwing repeated 403s.- Bandwidth caps: In Settings → Transfers, set e.g.,

200 Mbpsso local networks stay calm.

Save the job with a descriptive name like Drive-Master-Library-Sync.

Step 3 — Schedule around quotas

Use the Scheduler (Step 4 of the job wizard) to minimize collisions:

- Toggle Enable Scheduler and select Daily or Hourly windows.

- Run large uploads overnight local time so they overlap with Drive’s quietest hours.

- Chain multiple jobs with staggered start times (e.g.,

01:00,03:30,06:00) so quotas are spread across the reset window. - Turn on Retries (3-5) with exponential backoff. RcloneView auto-resumes exactly where it stopped because rclone stores file checksums and partial transfers.

- Enable Notifications so you get email/webhook alerts whenever Google throws a quota warning.

Example command surfaced in Job Details

rclone copy gdrive-main:Video gdrive-archive:Video ^

--transfers=4 ^

--checkers=4 ^

--drive-stop-on-upload-limit ^

--tpslimit=8 ^

--retries=5 --low-level-retries=10

You never need to run this manually, but it documents the mitigation for audits.

Step 4 — React when errors hit

- 750 GB/day reached: Job pauses with a clear log entry. Duplicate the job, change the source subfolder, or wait for the next midnight UTC reset. Scheduler resumes automatically.

- userRateLimitExceeded: Lower transfers/checkers, add

--tpslimit(Advanced tab), and consider moving API credentials to a dedicated Google Cloud project to gain a larger per-project quota. - 429 Too Many Requests: Set Scheduler to run hourly with smaller batches (use the Include/Exclude filter to split directories). Enable

--drive-chunk-size=64Mto smooth bursts. - Shared Drive permissions: Use Explorer to open the destination at least once; if Drive throws permissions errors, switch to a user that is a Manager/Content Manager on that Shared Drive.

- 5xx: Let the retries fire. If the same object repeatedly fails, mark it as skipped via Compare so you can keep the rest moving while you investigate.

All events are captured in Job History with downloadable logs, so evidence for support tickets or compliance reports is one click away.

Step 5 — Monitor proactively

- Transfer panel: Watch bandwidth graphs and per-file status so you catch throttling instantly.

- Compare after automation: Re-run Compare (Dry Run) to confirm no pending deltas remain once quotas reset.

- Activity timeline: The Scheduler view lists “Last run / Next run / Status” so you know exactly when the pipeline pauses for quotas.

- Notifications: Hook jobs into Slack/email so rate-limit alerts reach the right team before users notice missing files.

- Launch at login: Enable it in Settings so RcloneView + Scheduler survive workstation reboots.

Best practices for Drive-heavy teams

- Rotate service accounts: For Workspace admins, assign separate service accounts per department so quotas are distributed.

- Stage large media locally: Sync first to an on-prem NAS, then let RcloneView mirror that NAS into Drive overnight, breaking up the API usage.

- Tag jobs by priority: Mission-critical data gets nightly windows; non-urgent archives run weekly.

- Document presets: Export job definitions so teammates reuse tuned settings rather than invent new ones that hit rate limits.

- Keep logs: Store RcloneView logs (JSON/CSV) in an audit bucket to prove each quota event was handled.

FAQ

How do I know which file hit the limit?

Open Job History → View Log. The exact file path sits above the error message, so you can rerun only that directory.

Can I work around the 750 GB/day limit?

Not directly. Split data across multiple Google accounts (each with its own quota) or wait for the reset. RcloneView’s filters help segment folders without moving them manually.

Does lowering transfers slow everything down?

It might, but it is better than crashing jobs. Pair lower transfers with more frequent Scheduler runs so the net throughput still meets SLAs.

What if Drive bans my API key?

Create a Google Cloud project solely for RcloneView/rclone, add OAuth credentials, and limit access to trusted operators. Rotate the key if abuse is detected.

Keep Drive migrations healthy

Drive quotas and rate limits are permanent, but with RcloneView you can plan for them, receive early warnings, and automatically resume when Google gives the green light again. Tune jobs once, schedule them, and let the GUI enforce best practices so you never babysit manual retries.

Manage & Sync All Clouds in One Place

RcloneView is a cross-platform GUI for rclone. Compare folders, transfer or sync files, and automate multi-cloud workflows with a clean, visual interface.

- One-click jobs: Copy · Sync · Compare

- Schedulers & history for reliable automation

- Works with Google Drive, OneDrive, Dropbox, S3, WebDAV, SFTP and more

Free core features. Plus automations available.