Hybrid Cloud Made Easy: Combine NAS, S3, and Cloudflare R2 in One Workflow

Bridge your on-prem NAS with S3-compatible clouds and Cloudflare R2 using RcloneView’s visual workflows.

Why hybrid cloud storage is trending in 2025

Teams want LAN-speed collaboration plus cloud durability and edge delivery. That means:

- A NAS (Synology, QNAP, TrueNAS, etc.) keeps day-to-day files close to the team.

- Amazon S3 or Wasabi stores long-term backups, analytics data, or compliance snapshots.

- Cloudflare R2 serves content to users worldwide with no egress fees.

Juggling these manually is painful—different portals, scripts, and cron jobs. RcloneView unifies them:

- Add your NAS (via SMB or WebDAV, or an NFS share mounted locally), S3-compatible buckets, and Cloudflare R2 in the same Explorer.

- Use Compare, drag-and-drop, and Jobs to automate every leg of the workflow.

- Use job history, alerts, and dry runs to keep hybrid operations auditable.


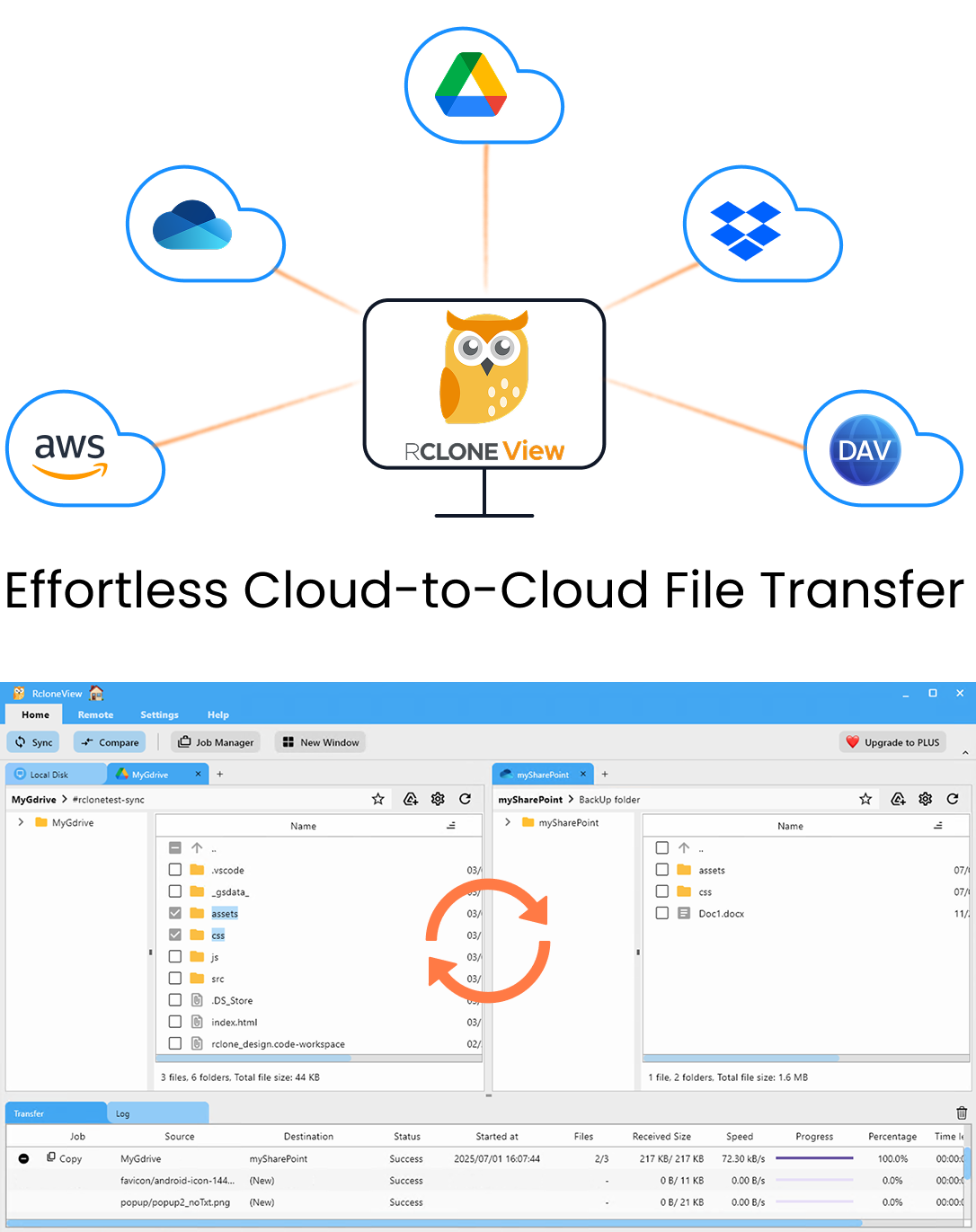


Reference architecture

- Local NAS tier – Primary collaboration volume or surveillance archive.

- S3 tier – Durable off-site copy with lifecycle policies (S3 Standard → Standard-IA or Glacier).

- Cloudflare R2 tier – Edge-friendly repository for web sites, downloads, or CDN workloads.

RcloneView becomes the control plane. You can visually orchestrate:

- NAS → S3 nightly backups.

- S3 → R2 replication for distribution.

- On-demand restores from R2/S3 down to NAS for local editing.

Step 1 – Prep your endpoints

- NAS: Enable an S3-compatible object service if your NAS offers one (for example, a MinIO package), or enable SMB/WebDAV for direct file access.

- S3: Create dedicated IAM users with bucket-level permissions.

- Cloudflare R2: Create an R2 API token scoped to the required buckets; it provides the Access Key ID and Secret Access Key that S3-compatible clients use.

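- Connectivity: Verify that the host running RcloneView (a workstation or the NAS itself) can reach the NAS shares and the S3/R2 endpoints over HTTPS; if the NAS is published through a reverse proxy, open only the ports that proxy needs.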

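- Cost planning: Model your data flows. NAS→S3 uploads consume your ISP upstream bandwidth, but AWS does not bill S3 data transfer in. S3→R2 copies incur S3 data-transfer-out charges, while R2 charges no ingress fee (only per-request operation fees).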
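For the S3 step, a bucket-scoped identity policy can be generated rather than hand-written. The sketch below is hypothetical (the function name and the `studio-archive` bucket are illustrative). Its action list follows the minimum permissions rclone's S3 documentation gives for `sync` (ListBucket, GetObject, PutObject, PutObjectACL, DeleteObject, plus CreateBucket unless the remote sets `no_check_bucket`), so confirm it against the current docs before attaching it to an IAM user.

```python
import json


def rclone_bucket_policy(bucket: str, allow_create: bool = False) -> dict:
    """Build an IAM identity policy that limits an rclone user to one bucket (sketch)."""
    actions = [
        "s3:ListBucket",     # list objects (applies to the bucket ARN)
        "s3:GetObject",      # read objects for compare and restore
        "s3:PutObject",      # upload new and changed files
        "s3:PutObjectAcl",   # rclone may set an ACL on upload
        "s3:DeleteObject",   # needed by sync when files disappear from the source
    ]
    if allow_create:
        # Only needed if the remote does not set no_check_bucket = true.
        actions.append("s3:CreateBucket")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": actions,
                # Bucket ARN for ListBucket, object ARN for the object-level actions.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # "studio-archive" is a placeholder bucket name.
    print(json.dumps(rclone_bucket_policy("studio-archive"), indent=2))
```

Leaving `allow_create` off and setting `no_check_bucket` on the remote means the backup user cannot create stray buckets.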

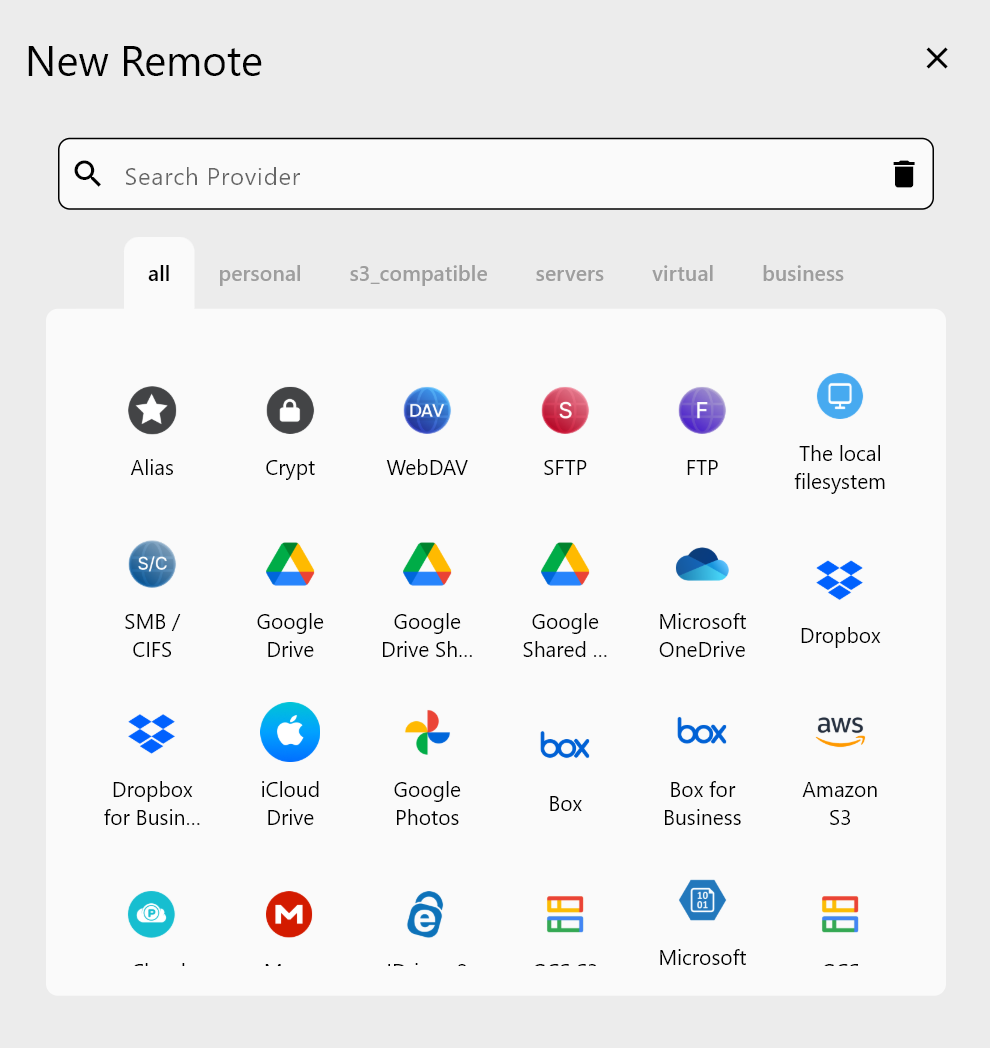

Step 2 – Add remotes in RcloneView

- Launch RcloneView and click + New Remote.

- Pick the backend type:

- S3-compatible for Amazon, Wasabi, or your NAS gateway (enter the custom endpoint or IP).

- WebDAV/SMB if your NAS exposes file shares.

- Cloudflare R2 (an S3-compatible remote with Cloudflare as the provider) using your account-specific endpoint, https://<account_id>.r2.cloudflarestorage.com.

- Give each remote a clear label (NAS_Studio, S3_Archive, R2_Distribution).

- Test the connections; each remote should then appear in the Explorer pane, ready for drag-and-drop transfers.

🔍 Helpful docs: S3 connection settings
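Behind the GUI, remotes are stored as rclone configuration. The sketch below assumes you want to inspect or script the equivalent entries: it writes the three remotes named above with Python's `configparser`. Hostnames, keys, the region, and the account ID are placeholders. The SMB password is deliberately left out because rclone expects it in obscured form, so set it through `rclone config` or RcloneView instead.

```python
import configparser
import io


def build_rclone_config(r2_account_id: str) -> configparser.ConfigParser:
    """Describe the NAS, S3, and R2 remotes as rclone.conf sections (placeholder values)."""
    cfg = configparser.ConfigParser()
    cfg["NAS_Studio"] = {
        "type": "smb",
        "host": "nas.local",      # placeholder NAS hostname
        "user": "rclone-svc",     # placeholder service account
    }
    cfg["S3_Archive"] = {
        "type": "s3",
        "provider": "AWS",
        "access_key_id": "AKIA_PLACEHOLDER",
        "secret_access_key": "SECRET_PLACEHOLDER",
        "region": "us-east-1",
        # Don't check for or create the bucket, so the IAM user needs no CreateBucket right.
        "no_check_bucket": "true",
    }
    cfg["R2_Distribution"] = {
        "type": "s3",
        "provider": "Cloudflare",
        "access_key_id": "R2_KEY_PLACEHOLDER",
        "secret_access_key": "R2_SECRET_PLACEHOLDER",
        "region": "auto",
        # R2's S3 endpoint is specific to your Cloudflare account.
        "endpoint": f"https://{r2_account_id}.r2.cloudflarestorage.com",
    }
    return cfg


def render(cfg: configparser.ConfigParser) -> str:
    """Serialize the config in the INI layout rclone.conf uses."""
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(render(build_rclone_config("your-account-id")))
```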

Step 3 – Build hybrid workflows

A) NAS → S3 backup lane

- Use Compare to preview NAS folders against S3 buckets.

- Run Sync with `--backup-dir` enabled so files that would be overwritten or deleted are moved into a dated prefix instead of being lost.

- Save it as a Job (NAS_to_S3_Nightly) and schedule it during off-hours.
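The nightly lane boils down to one `rclone sync` invocation. This sketch builds it in Python; the bucket name and the `current/` and `_backups/` layout are hypothetical. It keeps the backup directory on the same remote as the destination but outside the synced path, because rclone requires `--backup-dir` to be on the destination's remote and not to overlap the destination.

```python
import datetime
import subprocess


def nas_to_s3_sync_cmd(src: str, bucket: str, day: datetime.date,
                       dry_run: bool = False) -> list[str]:
    """Build the NAS_to_S3_Nightly sync command with a dated backup prefix."""
    dest = f"S3_Archive:{bucket}/current"
    # Same remote as dest, but a sibling path, so the two never overlap.
    backup = f"S3_Archive:{bucket}/_backups/{day.isoformat()}"
    cmd = ["rclone", "sync", src, dest, "--backup-dir", backup]
    if dry_run:
        cmd.append("--dry-run")   # report what would change without touching S3
    return cmd


def run_nightly(src: str, bucket: str) -> None:
    """Run today's sync; check=True raises if rclone exits non-zero."""
    subprocess.run(nas_to_s3_sync_cmd(src, bucket, datetime.date.today()), check=True)
```

A scheduler would call something like `run_nightly("NAS_Studio:projects", "studio-archive")`; each night's replaced or deleted files then sit under their own date prefix.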

B) S3 → Cloudflare R2 publishing lane

- After backups land in S3, duplicate key prefixes into R2 for low-latency delivery.

- Use Dry Run first to confirm object counts.

- Enable notifications so the web team knows when new builds hit R2.
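A publishing step gated on a dry run might look like the sketch below. It illustrates the idea rather than RcloneView's Job engine: bucket names and prefixes are placeholders, it uses `rclone copy` (which never deletes at the destination), and it reads object counts from `rclone size --json`.

```python
import json
import subprocess


def publish_cmds(prefix: str, s3_bucket: str, r2_bucket: str) -> tuple[list[str], list[str]]:
    """Return (preview, real) rclone commands for copying one prefix from S3 to R2."""
    base = ["rclone", "copy",
            f"S3_Archive:{s3_bucket}/{prefix}",
            f"R2_Distribution:{r2_bucket}/{prefix}"]
    return base + ["--dry-run"], base


def object_count(remote_path: str) -> int:
    """Count objects under a path using `rclone size --json`."""
    out = subprocess.run(["rclone", "size", "--json", remote_path],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)["count"]


def publish(prefix: str, s3_bucket: str, r2_bucket: str) -> bool:
    preview, real = publish_cmds(prefix, s3_bucket, r2_bucket)
    src, dst = real[2], real[3]
    subprocess.run(preview, check=True)   # logs what would be copied; changes nothing
    if input("Dry run OK? Copy for real? [y/N] ").strip().lower() != "y":
        return False
    subprocess.run(real, check=True)
    # R2 may already hold older objects, so expect at least as many as the source.
    return object_count(dst) >= object_count(src)
```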

C) R2/S3 → NAS restore lane

- Open a dual-pane view (R2 left, NAS right).

- Drag specific folders back for local editing or disaster recovery.

- Track restores in Job History to prove RPO/RTO compliance.
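Restores can also be timed against a recovery time objective. In this hypothetical sketch the paths and the RTO value are placeholders; it copies rather than syncs, so nothing already on the NAS is deleted, and it measures wall-clock duration with a monotonic clock.

```python
import datetime
import subprocess
import time


def restore_cmd(bucket_path: str, nas_path: str) -> list[str]:
    """Copy a folder from R2 back to the NAS; copy never deletes files on the NAS."""
    return ["rclone", "copy",
            f"R2_Distribution:{bucket_path}", f"NAS_Studio:{nas_path}"]


def timed_restore(bucket_path: str, nas_path: str, rto: datetime.timedelta) -> bool:
    """Run the restore and report whether it finished within the RTO target."""
    start = time.monotonic()
    subprocess.run(restore_cmd(bucket_path, nas_path), check=True)
    elapsed = datetime.timedelta(seconds=time.monotonic() - start)
    print(f"restore took {elapsed}; RTO target {rto}")
    return elapsed <= rto
```

For example, `timed_restore("downloads/site", "restore/site", datetime.timedelta(hours=4))` returns `True` only if the copy completed inside four hours, a result worth recording next to the Job History entry.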

Automation playbook

- Template jobs: Clone base jobs (e.g., “NAS→S3 Sync”) for each department to keep rules consistent.

- Tag schedules: Prefix job names with [Backup], [Publish], or [Restore] for fast filtering.

- Use retention rules: Pair RcloneView jobs with S3 lifecycle policies so warm data ages into cheaper tiers automatically.

- Monitor telemetry: Export job logs weekly and ship them to your SIEM or Slack to keep stakeholders in the loop.

- Test failover: Quarterly, simulate a NAS outage and restore from S3/R2 to validate documentation.
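The template-and-tag pattern can be sketched as an immutable job description cloned per department. `JobSpec`, `BASE`, and `clone_for` are hypothetical names (RcloneView keeps jobs in its own format), and the cron-style schedule string is illustrative only.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class JobSpec:
    """Hypothetical job description; not RcloneView's storage format."""
    name: str
    mode: str       # e.g. "sync" or "copy"
    source: str     # may contain a {dept} placeholder
    dest: str       # may contain a {dept} placeholder
    schedule: str   # cron-style string, illustrative only


BASE = JobSpec(
    name="NAS->S3 Sync",
    mode="sync",
    source="NAS_Studio:{dept}",
    dest="S3_Archive:backups/{dept}",
    schedule="0 2 * * *",
)


def clone_for(dept: str, tag: str = "Backup") -> JobSpec:
    """Copy the base job for one department, adding a [Tag] prefix for filtering."""
    return replace(
        BASE,
        name=f"[{tag}] {BASE.name} ({dept})",
        source=BASE.source.format(dept=dept),
        dest=BASE.dest.format(dept=dept),
    )
```

Because every clone derives from `BASE`, a change to the base mode or schedule reaches every department's job the next time the jobs are regenerated.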

Troubleshooting tips

- Slow NAS uploads? rclone already switches to multipart uploads for large files; raise the chunk size, upload concurrency, or number of parallel transfers in Job settings.

- Mismatched timestamps? Use Compare’s metadata pane to identify time-zone drift before syncing.

- Permission errors? Double-check IAM policies for S3 and token scopes in R2; NAS shares may require service accounts.

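- Version conflicts? Turn on `--checksum` for critical archives so files are compared by hash rather than by size and modification time, or enable backup directories to retain old revisions.

- Bandwidth spikes? Throttle jobs during business hours (rclone's `--bwlimit` accepts a daily timetable) and let off-hours windows run at full speed.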
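The tuning tips translate into a handful of rclone flags. The values in this sketch are examples, not recommendations, and the helper name is hypothetical. Per rclone's S3 docs, multipart uploads buffer roughly `--transfers` × `--s3-upload-concurrency` × `--s3-chunk-size` in memory, which here is 8 × 4 × 16M = 512M.

```python
def tuning_flags(critical: bool, business_hours_limit: str = "10M") -> list[str]:
    """Build rclone flags for throughput, daytime throttling, and checksum checks."""
    flags = [
        "--transfers", "8",               # files moved in parallel (default 4)
        "--s3-upload-concurrency", "4",   # parts of one multipart file uploaded in parallel
        "--s3-chunk-size", "16M",         # multipart part size (default 5M)
        # Daily timetable: throttle from 08:00, remove the limit from 18:00.
        "--bwlimit", f"08:00,{business_hours_limit} 18:00,off",
    ]
    if critical:
        flags.append("--checksum")        # compare by hash, not size + modtime
    return flags
```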

FAQs

Q. Do I need CLI access on the NAS?

A. No. As long as the NAS exposes an S3/WebDAV/SMB endpoint reachable from the machine running RcloneView, you can manage it entirely from the GUI.

Q. Can I encrypt data in transit between NAS and S3?

A. Yes. Use HTTPS endpoints to encrypt data in transit. For encryption at rest, enable the S3 remote's server-side encryption option, or wrap the remote in an rclone crypt remote, when configuring it inside RcloneView.

Q. What’s the best way to handle large media libraries?

A. Break them into prefix-based jobs (e.g., /projects/a-m/) and stagger schedules to stay within API rate limits.
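Prefix-based splitting can be expressed as rclone include filters, one per job, each starting an hour after the previous one. This sketch uses placeholder remotes and ranges. rclone's filter globs support character classes such as `[a-m]`, but matching is case-sensitive by default, so folders starting with uppercase letters or digits need ranges of their own.

```python
def prefix_jobs(src: str, dst: str, root: str, ranges: list[tuple[str, str]],
                start_hour: int = 1, gap_hours: int = 1) -> list[dict]:
    """One sync job per letter range, each scheduled gap_hours after the previous."""
    jobs = []
    for i, (first, last) in enumerate(ranges):
        # Top-level folders under /root whose names start with first..last.
        pattern = f"/{root}/[{first}-{last}]*/**"
        jobs.append({
            "start": f"{(start_hour + i * gap_hours) % 24:02d}:00",
            # Files outside the include are left alone on dst (no --delete-excluded).
            "cmd": ["rclone", "sync", src, dst, "--include", pattern],
        })
    return jobs
```

Calling `prefix_jobs("NAS_Studio:media", "S3_Archive:media", "projects", [("a", "m"), ("n", "z")])` yields an a-m job at 01:00 and an n-z job at 02:00 that never touch each other's files.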

Q. Does Cloudflare R2 charge for ingress when pulling from S3?

A. No. R2 does not charge for ingress (write requests are still billed as Class A operations), but AWS bills S3 data transfer out. Budget for that when planning the S3 → R2 publishing lane.
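A rough monthly budget for the publishing lane is simple arithmetic. In this sketch every rate is an input that you must take from current AWS and Cloudflare pricing; none are hard-coded, and the function name is hypothetical. It assumes one GET and one PUT per object. Multipart uploads of large files issue several PUTs, and listings add requests, so treat the result as a lower bound.

```python
def publish_lane_cost(gb: float, objects: int, s3_egress_per_gb: float,
                      s3_get_per_1k: float, r2_class_a_per_million: float) -> dict:
    """Estimate one month of S3 -> R2 copy charges from rates you supply."""
    egress = gb * s3_egress_per_gb                              # AWS data transfer out
    s3_reads = objects / 1_000 * s3_get_per_1k                  # one GET per object copied
    r2_writes = objects / 1_000_000 * r2_class_a_per_million    # one PUT per object; R2 ingress is free
    return {
        "s3_egress": egress,
        "s3_requests": s3_reads,
        "r2_requests": r2_writes,
        "total": round(egress + s3_reads + r2_writes, 2),
    }
```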