Warm-Standby Disaster Recovery Across Clouds with RcloneView (S3, Wasabi, R2, OneDrive)


Keep a live copy of production data in another region or cloud and switch within minutes when incidents strike.

Warm-standby DR pairs a primary location (e.g., AWS S3 or OneDrive) with a continuously updated standby (e.g., Cloudflare R2 or Wasabi). RcloneView layers a GUI over rclone so you can schedule steady syncs, validate drift with Compare, and mount the standby for rapid failover—without shell scripts.

Relevant docs

- Create Sync Jobs: https://rcloneview.com/support/howto/rcloneview-basic/create-sync-jobs

- Job Scheduling & Execution (Plus): https://rcloneview.com/support/howto/rcloneview-advanced/job-scheduling-and-execution

- Mount as local drive: https://rcloneview.com/support/howto/rcloneview-basic/mount-cloud-storage-as-a-local-drive

- Compare folders: https://rcloneview.com/support/howto/rcloneview-basic/compare-folder-contents

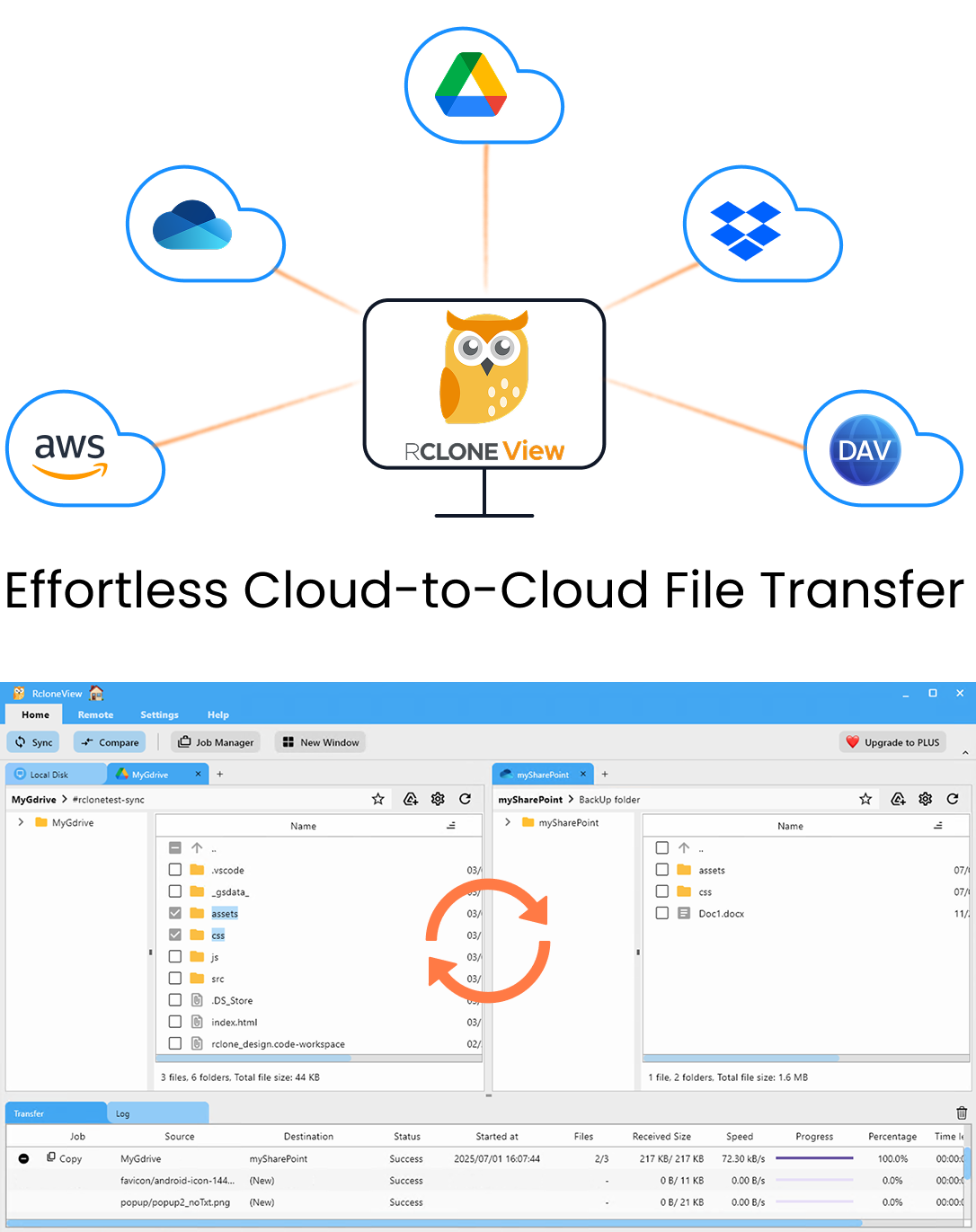

Manage & Sync All Clouds in One Place

RcloneView is a cross-platform GUI for rclone. Compare folders, transfer or sync files, and automate multi-cloud workflows with a clean, visual interface.

- One-click jobs: Copy · Sync · Compare

- Schedulers & history for reliable automation

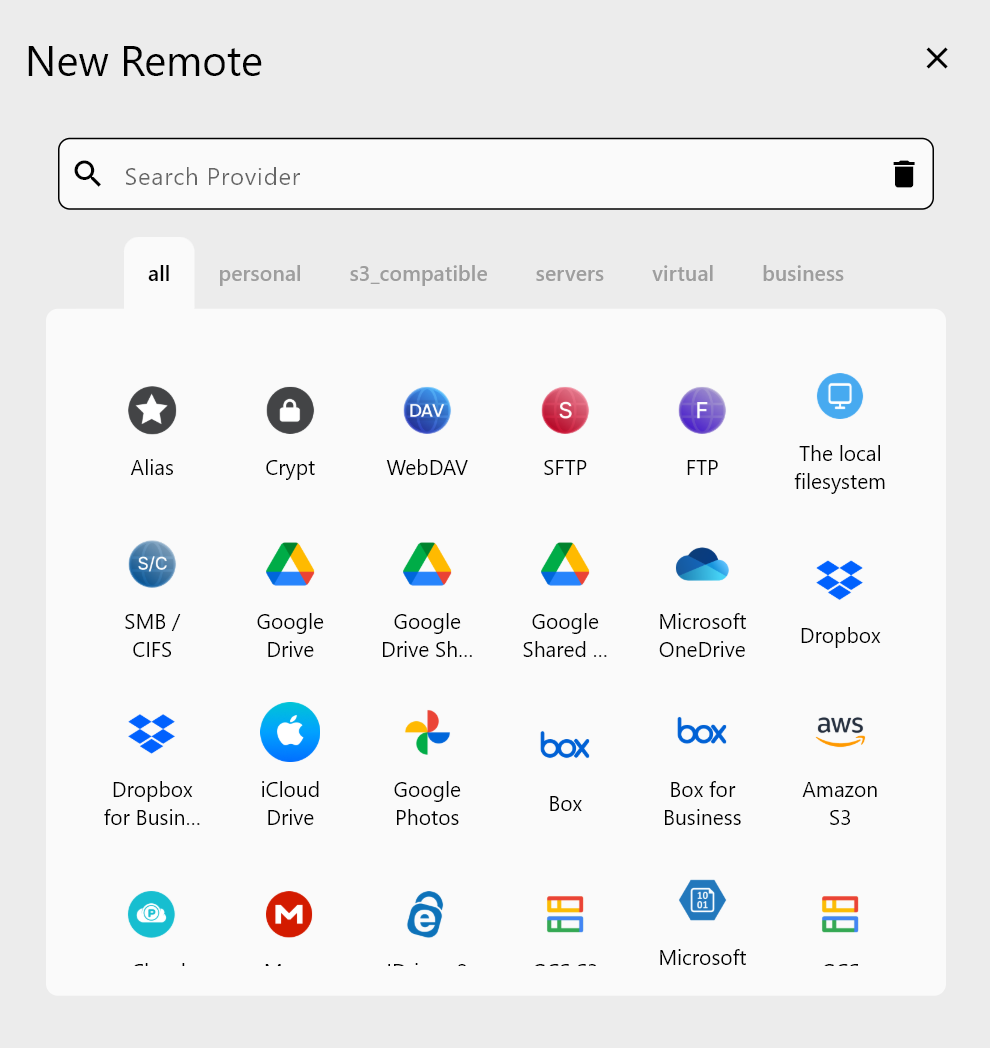

- Works with Google Drive, OneDrive, Dropbox, S3, WebDAV, SFTP and more

WindowsmacOSLinux

Get Started Free →Free core features. Plus automations available.

Why warm-standby with RcloneView

- Faster recovery: Standby copies are within minutes/hours of primary, not days.

- Cloud choice: Mix S3, Wasabi, R2, B2, Google Drive, Dropbox, or OneDrive.

- No scripts: Build jobs in a wizard, not YAML/cron.

- Visible drift: Compare highlights mismatches before you need to fail over.

- Safer restores: Mount the standby and copy back without touching production.

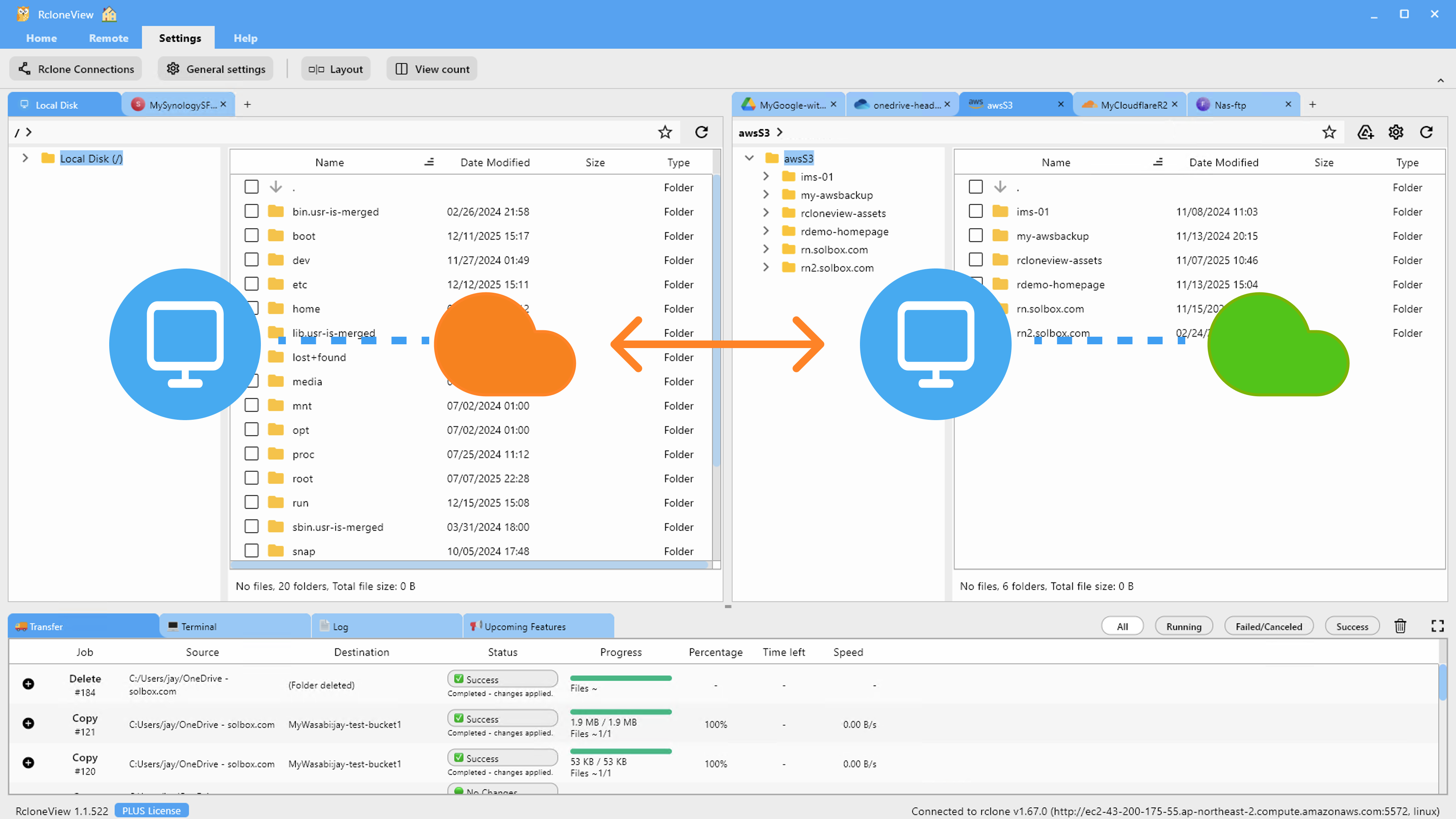

Strategy and architecture

    [Primary cloud/local/NAS] --(RcloneView scheduled Sync)--> [Standby cloud/region]
                 \
                  --(Weekly Compare)--> [Drift report]

- Primary: where apps write (S3 bucket, OneDrive site, GDrive workspace, NAS).

- Standby: another region/provider with versioning (R2/Wasabi/S3/B2).

- Control: RcloneView runs sync on intervals; Compare checks integrity; Mount enables rapid access during failover.

Prerequisites

- Two remotes configured in RcloneView (e.g., s3:prod-bucket and r2:standby-bucket).

- Versioning enabled on the standby for rollback safety.

- IAM/API permissions for list/read/write on both sides.

- Bandwidth window for scheduled replication (nightly or hourly).
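
A quick estimate can show whether a replication window is realistic before any job is scheduled. The sketch below is a back-of-the-envelope calculation, not an RcloneView feature: the function names, the 70% link-efficiency factor, and the sample figures are all assumptions chosen for illustration.

```python
def transfer_hours(changed_gb: float, link_mbps: float, efficiency: float = 0.7) -> float:
    """Estimate hours needed to replicate `changed_gb` over a `link_mbps` link.

    `efficiency` is an assumed fraction of the nominal link speed that is
    actually usable (protocol overhead, API latency, throttling).
    """
    if changed_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("sizes and speeds must be positive; efficiency in (0, 1]")
    megabits = changed_gb * 8 * 1000  # GB -> megabits, decimal units
    seconds = megabits / (link_mbps * efficiency)
    return seconds / 3600


def fits_window(changed_gb: float, link_mbps: float, window_hours: float) -> bool:
    """Return True when the estimated transfer fits inside the window."""
    return transfer_hours(changed_gb, link_mbps) <= window_hours
```

Under these assumptions, 200 GB of nightly changes on a 500 Mbps link takes roughly 1.3 hours, so a two-hour window would fit and a one-hour window would not. Real throughput depends on object sizes and provider rate limits, so treat the result as a starting point.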

Step 1: Build the baseline sync job

- Create a Sync job: Source = primary, Destination = standby.

- Use one-way Sync to mirror new and updated files; let the job propagate deletions to the standby only if you want strict parity with the primary.

- Add filters for noisy paths (e.g., cache/temp) in the Filtering step.

- In Advanced Settings, adjust transfer counts and enable checksum comparison if both sides support hashes.

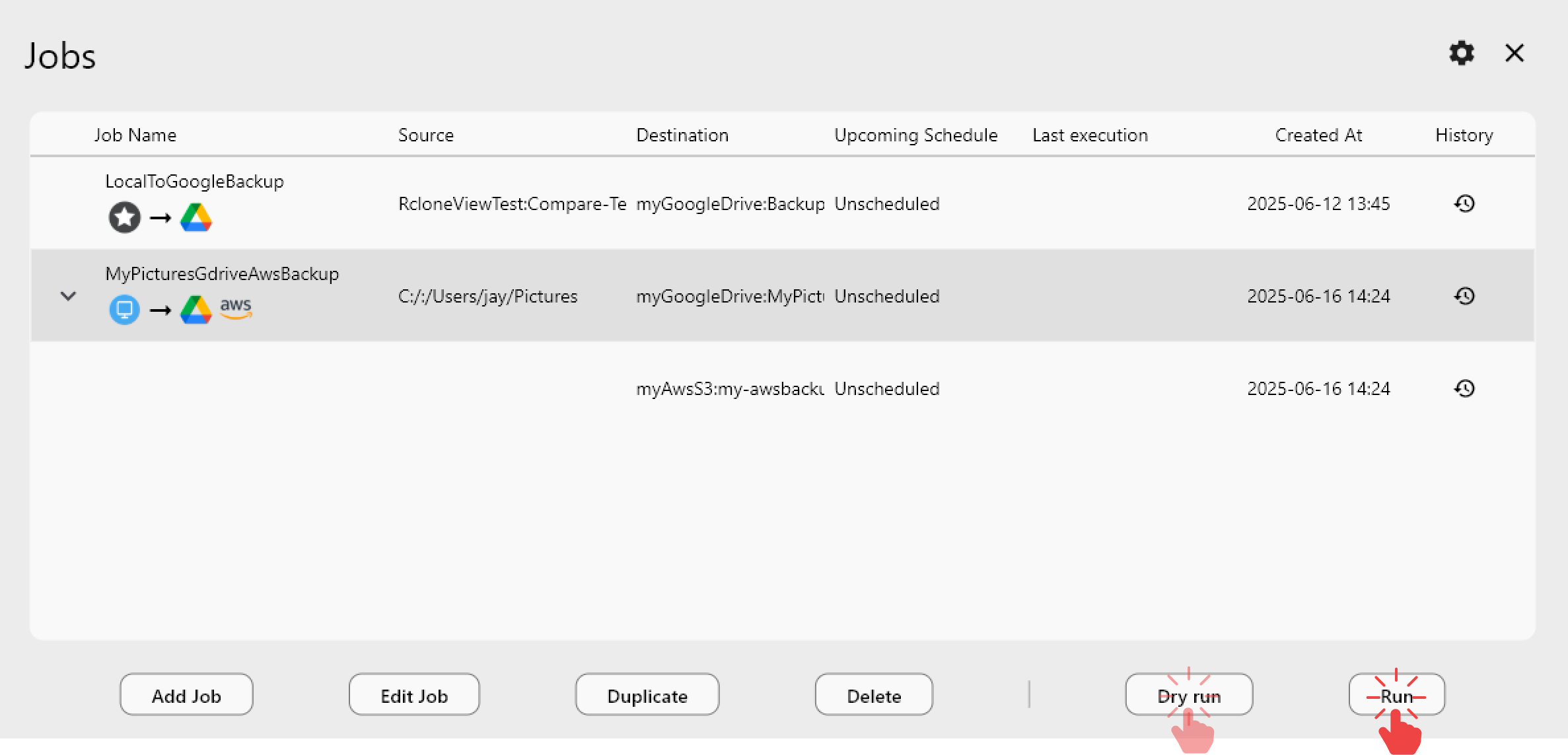

- Save the job so the same settings apply to every run (Job Manager).
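
For readers curious about what the baseline job roughly corresponds to on the rclone command line, here is a minimal sketch. It is an approximation only: the article does not show the exact options RcloneView passes, the helper name is hypothetical, and the exclude patterns are made-up examples. The flags themselves (`--transfers`, `--exclude`, `--checksum`, `--dry-run`) are standard rclone options, and the remote names come from the prerequisites example.

```python
import shlex
from typing import List, Sequence


def build_sync_command(
    source: str,
    dest: str,
    excludes: Sequence[str] = (),
    transfers: int = 4,
    checksum: bool = False,
    dry_run: bool = True,
) -> List[str]:
    """Assemble an `rclone sync` argument list (hypothetical helper)."""
    if transfers < 1:
        raise ValueError("transfers must be at least 1")
    cmd = ["rclone", "sync", source, dest, "--transfers", str(transfers)]
    for pattern in excludes:
        cmd += ["--exclude", pattern]  # skip noisy paths such as caches
    if checksum:
        cmd.append("--checksum")  # compare by hash instead of size/modtime
    if dry_run:
        cmd.append("--dry-run")  # report planned changes without applying them
    return cmd


cmd = build_sync_command(
    "s3:prod-bucket",
    "r2:standby-bucket",
    excludes=["cache/**", "tmp/**"],  # example patterns, adjust to your data
    checksum=True,
)
print(shlex.join(cmd))
```

Defaulting to a dry run is a deliberate choice in this sketch: a one-way sync can delete files on the destination, so previewing the first run is a cheap safeguard.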

Step 2: Schedule continuous updates

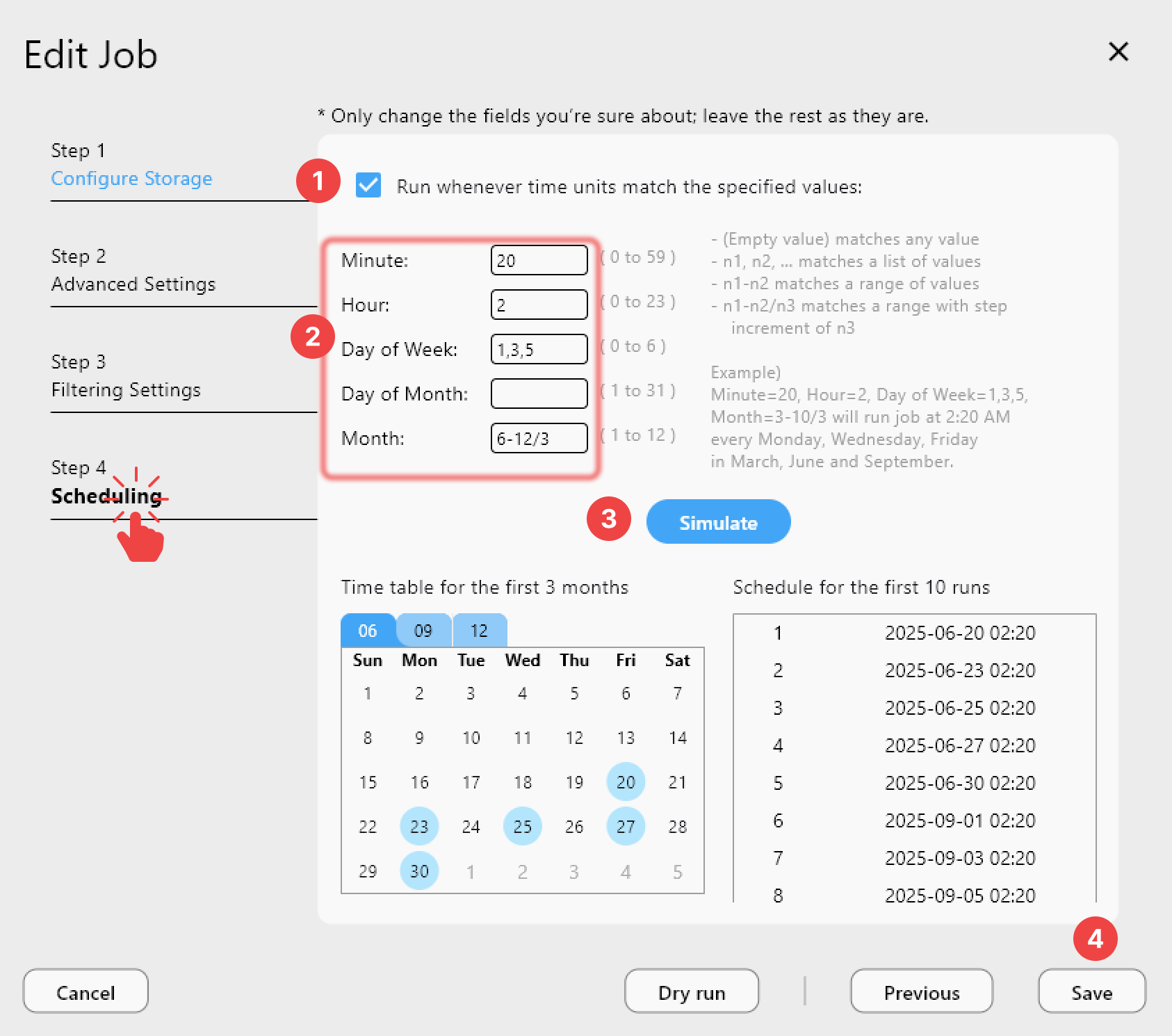

- In the Job wizard (Step 4: Scheduling, Plus license), enable scheduling for the DR job.

- Choose cadence: hourly for app data, nightly for archives, and use Simulate to preview upcoming runs.

- Set retry attempts in Advanced Settings for flaky links.

- Keep a manual weekly Compare to detect drift early.
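
The cadence you pick sets how stale the standby can get. The sketch below illustrates that relationship with plain date arithmetic. It is not RcloneView's scheduler or its Simulate feature, whose internals are not described here; the function names and the staleness bound are assumptions for illustration.

```python
from datetime import datetime, timedelta
from typing import List


def upcoming_runs(start: datetime, interval: timedelta, count: int) -> List[datetime]:
    """Return the next `count` run times, spaced `interval` apart after `start`."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    return [start + interval * i for i in range(1, count + 1)]


def worst_case_staleness(interval: timedelta, run_duration: timedelta) -> timedelta:
    """Rough upper bound on how far the standby can lag behind the primary.

    Assumes a file written just after a run begins is picked up by the
    next run and lands on the standby when that run finishes.
    """
    return interval + run_duration


hourly = upcoming_runs(datetime(2025, 1, 1, 0, 0), timedelta(hours=1), 3)
nightly = upcoming_runs(datetime(2025, 1, 1, 2, 0), timedelta(days=1), 2)
lag = worst_case_staleness(timedelta(hours=1), timedelta(minutes=10))
```

With an hourly job that takes about ten minutes, the standby could be up to roughly 70 minutes behind under this simplified model, which is worth comparing against your recovery point objective.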

Step 3: Verify and monitor

- Use Compare to confirm that object counts align and no files are missing or different before declaring the standby ready.

- Review Job History for failures or retries and rerun the job if a window was missed.

- Keep versioning on the standby so accidental deletes can be recovered.
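
The idea behind a drift check can be sketched on two in-memory listings. This is an illustration of the concept, not RcloneView's Compare implementation; the `Listing` type, the `DriftReport` class, and the sample data are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Listing = Dict[str, Tuple[int, str]]  # path -> (size in bytes, content hash)


@dataclass
class DriftReport:
    missing_on_standby: List[str] = field(default_factory=list)
    extra_on_standby: List[str] = field(default_factory=list)
    changed: List[str] = field(default_factory=list)

    @property
    def in_sync(self) -> bool:
        return not (self.missing_on_standby or self.extra_on_standby or self.changed)


def compare_listings(primary: Listing, standby: Listing) -> DriftReport:
    """Classify every path as missing, extra, changed, or identical."""
    report = DriftReport()
    for path in sorted(primary.keys() | standby.keys()):
        if path not in standby:
            report.missing_on_standby.append(path)
        elif path not in primary:
            report.extra_on_standby.append(path)
        elif primary[path] != standby[path]:
            report.changed.append(path)
    return report


primary = {"a.txt": (10, "h1"), "b.txt": (20, "h2"), "c.txt": (5, "h3")}
standby = {"a.txt": (10, "h1"), "b.txt": (20, "xx"), "d.txt": (7, "h4")}
report = compare_listings(primary, standby)
```

In this sample both sides hold three objects, yet one file is missing, one is extra, and one differs. Matching counts are a useful first signal but do not prove parity on their own.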

Step 4: Failover playbook

- Mount the standby: use Mount Manager to mount the destination remote to a stable path/drive letter.

- Point workloads to the mounted path or to the standby bucket endpoint.

- Keep the primary read-only or offline until incident triage is done.
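
Mount Manager handles mounting in the GUI. As a rough command-line equivalent, the sketch below assembles an `rclone mount` invocation. The helper name and the mount point are hypothetical, and starting read-only while you validate the standby is an assumption of this sketch rather than something the playbook prescribes. `--read-only` and `--vfs-cache-mode` are standard rclone mount options.

```python
from typing import List

VFS_CACHE_MODES = {"off", "minimal", "writes", "full"}


def build_mount_command(
    remote: str,
    mount_point: str,
    read_only: bool = True,
    vfs_cache_mode: str = "off",
) -> List[str]:
    """Assemble an `rclone mount` argument list (hypothetical helper)."""
    if vfs_cache_mode not in VFS_CACHE_MODES:
        raise ValueError(f"vfs_cache_mode must be one of {sorted(VFS_CACHE_MODES)}")
    cmd = ["rclone", "mount", remote, mount_point, "--vfs-cache-mode", vfs_cache_mode]
    if read_only:
        cmd.append("--read-only")  # prevents writes through the mount
    return cmd


# /mnt/standby is an example path; on Windows this could be a drive letter.
validate_cmd = build_mount_command("r2:standby-bucket", "/mnt/standby")
serve_cmd = build_mount_command(
    "r2:standby-bucket", "/mnt/standby", read_only=False, vfs_cache_mode="writes"
)
```

Workloads that write through the mount generally need a cache mode such as `writes`, which is why the second command differs from the validation one.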

Tuning tips

- Latency-sensitive apps: lower transfer counts in Advanced Settings and schedule during low traffic.

- Compliance: keep versioning on the standby and export Job History for audits.

- Cost control: exclude staging/temp folders via Filters and apply lifecycle policies on the standby cloud.

- Multi-cloud: run separate jobs if you need two standbys (e.g., R2 + Wasabi) from the same primary.

Troubleshooting checklist

- Mismatched counts: rerun Compare and review Job History for skipped items; confirm versioning is on.

- Permission errors: ensure API keys allow list/read/write on both clouds.

- Restore deletes data: Sync removes destination files that are missing from the source, so use Copy (not Sync) when bringing data back to production.
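
The difference between Copy and Sync during a restore is easy to see in a toy model. The sketch below simulates both operations on dictionaries. It is a simplified illustration of the semantics, not rclone's implementation, and the file names are invented.

```python
from typing import Dict

Tree = Dict[str, str]  # path -> content marker


def simulate_copy(source: Tree, dest: Tree) -> Tree:
    """Copy adds or updates files from source; nothing in dest is removed."""
    result = dict(dest)
    result.update(source)
    return result


def simulate_sync(source: Tree, dest: Tree) -> Tree:
    """Sync makes dest match source, dropping files that source lacks."""
    return dict(source)


standby = {"orders.db": "v2", "report.pdf": "v1"}
production = {"orders.db": "v1", "new-upload.csv": "v1"}

after_copy = simulate_copy(standby, production)
after_sync = simulate_sync(standby, production)
```

After the copy, production still holds `new-upload.csv`, a file written after the last replication. After the sync it is gone, because the standby never had it.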

Keep your standby warm, tested, and ready so failover is a switch—not a scramble.