Accelerate Large Cloud Transfers: Boost Speed & Stability in RcloneView

Move terabytes between clouds faster by tuning RcloneView’s parallelism, chunk sizes, and retry logic—no CLI scripts required.

Why performance tuning matters for enterprise migrations

When copy windows are tight, every minute counts. Slow or unstable transfers can:

- Delay product launches or compliance deadlines.

- Inflate egress bills as stalled jobs retry inefficiently.

- Leave teams juggling ad-hoc scripts instead of a consistent GUI workflow.

RcloneView builds on the proven rclone engine so you can optimize speed visually:

- Configure rclone parallel transfers per job.

- Adjust chunked uploads for S3, Wasabi, Cloudflare R2, Backblaze B2, and more.

- Monitor throughput and retries from Job History—then iterate without touching the CLI.

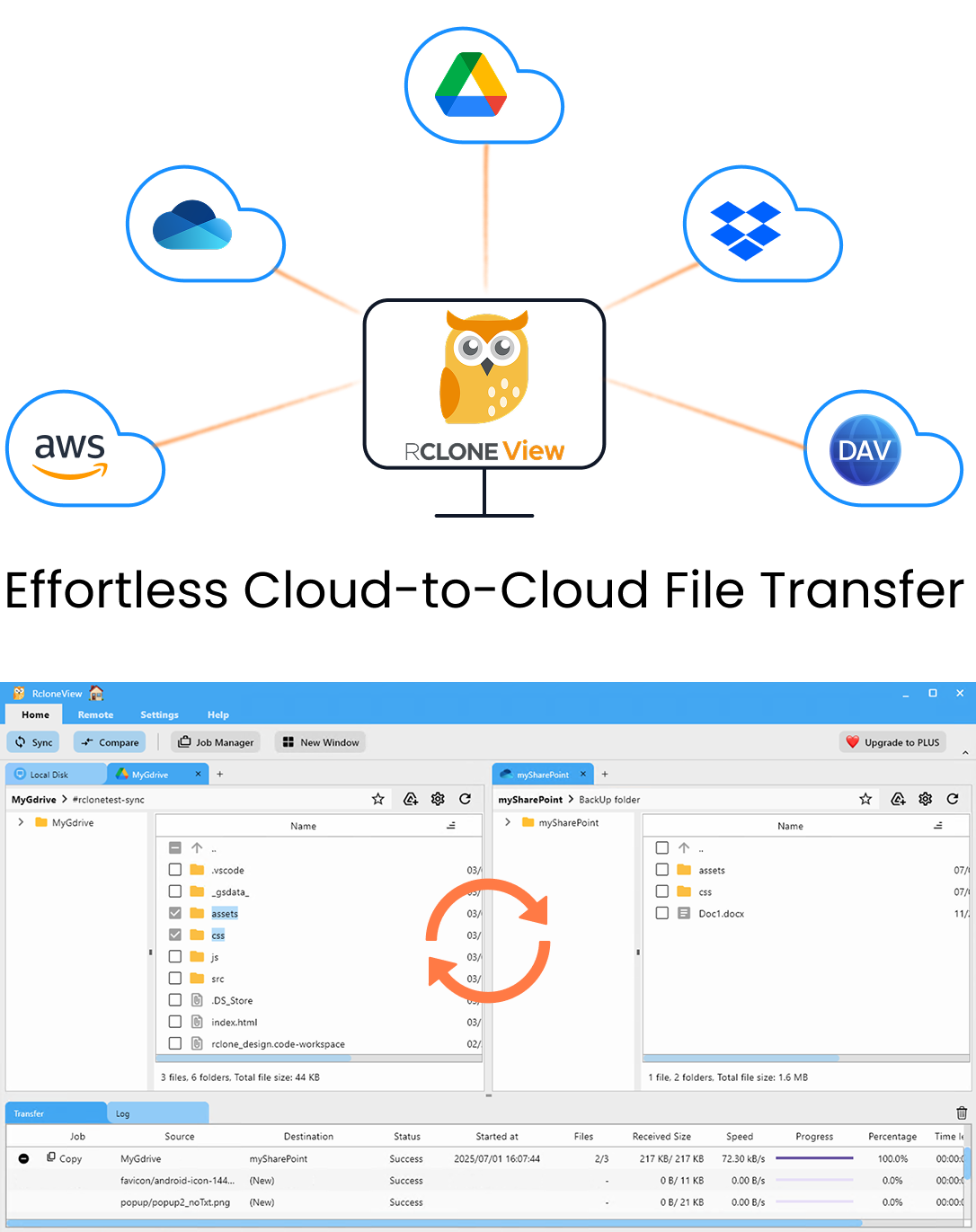

Manage & Sync All Clouds in One Place

RcloneView is a cross-platform GUI for rclone. Compare folders, transfer or sync files, and automate multi-cloud workflows with a clean, visual interface.

- One-click jobs: Copy · Sync · Compare

- Schedulers & history for reliable automation

- Works with Google Drive, OneDrive, Dropbox, S3, WebDAV, SFTP and more

Free core features. Plus automations available.

Step 1 – Baseline your transfer path

- Identify source/destination latencies: Run small test copies between NAS ↔ S3 ↔ R2 to understand RTT.

- Check provider limits: Some services cap concurrent multipart uploads; note their thresholds.

- Audit network uplinks: Ensure VPNs, firewalls, or SD-WAN appliances allow sustained throughput.

- Collect sample metrics: Use RcloneView’s Job History to capture MB/s, errors, and retry counts before tuning.
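As a rough illustration of the baseline step, the sketch below averages throughput and totals errors and retries across a few test copies. The `TestRun` record and its field names are hypothetical; they stand in for whatever numbers you read off Job History and are not an RcloneView export format.

```python
from dataclasses import dataclass
from statistics import mean

MIB = 1024 * 1024


@dataclass
class TestRun:
    """One small test copy. Field names are hypothetical, not an RcloneView schema."""
    bytes_copied: int
    seconds: float
    errors: int = 0
    retries: int = 0


def throughput_mib_s(run: TestRun) -> float:
    """Average throughput of a single run in MiB/s."""
    return run.bytes_copied / MIB / run.seconds


def baseline(runs):
    """Summarize several test runs into one baseline record."""
    return {
        "avg_mib_s": round(mean(throughput_mib_s(r) for r in runs), 1),
        "total_errors": sum(r.errors for r in runs),
        "total_retries": sum(r.retries for r in runs),
    }


# Two 1 GiB test copies: one took 64 s, the other 32 s.
runs = [
    TestRun(1024**3, 64.0, retries=2),
    TestRun(1024**3, 32.0, errors=1, retries=5),
]
print(baseline(runs))
```

Keeping the baseline as a single small record makes it easy to compare against later runs once tuning starts.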

Step 2 – Tune concurrency inside RcloneView

- Open your Job → Advanced Settings.

- Increase --transfers to enable more parallel file streams (start with 8–16).

- Adjust --checkers so metadata checks keep up (usually the same value as --transfers).

- For high-latency routes, raise --multi-thread-streams for faster single-file throughput.

- Save and rerun with Dry Run disabled to measure impact.

Rule of thumb: double --transfers until you hit either provider throttling or your network uplink limit, then back off by 20%.
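The rule of thumb can be sketched as a small search loop. This is one possible reading, not RcloneView's own logic: `measure` is a hypothetical callback that reruns the test job with a given transfer count and returns the observed MB/s, "hitting the limit" is taken to mean throughput improving by less than 5%, and the 20% back-off is applied to the first setting that stops improving.

```python
def tune_transfers(measure, start=8, ceiling=128, min_gain=1.05):
    """Double the transfer count while throughput keeps improving.

    measure(n) is a hypothetical callback returning MB/s for n parallel transfers.
    Returns the suggested transfer count.
    """
    n, rate = start, measure(start)
    while n * 2 <= ceiling:
        candidate = n * 2
        candidate_rate = measure(candidate)
        if candidate_rate < rate * min_gain:
            # Plateau or throttling: back off 20% from the setting that hit it.
            return max(start, int(candidate * 0.8))
        n, rate = candidate, candidate_rate
    return n  # never plateaued below the ceiling


# Simulated link that saturates at 300 MB/s.
print(tune_transfers(lambda n: min(n * 10, 300)))
```

In practice each `measure` call is a full test run, so the doubling schedule keeps the number of runs small.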

Step 3 – Optimize chunked uploads

S3-compatible clouds (Amazon S3, Wasabi, DigitalOcean Spaces)

- Set --s3-chunk-size (e.g., 64M or 128M) to reduce round trips.

- Increase --s3-upload-concurrency if you have CPU and memory headroom.

- Keep --s3-disable-checksum=false (the default) when data integrity matters more than raw speed.
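Two back-of-the-envelope checks help when picking these values. The sketch assumes Amazon S3's documented multipart limits (at most 10,000 parts, 5 MiB minimum part size) and treats upload buffer memory as roughly transfers × upload concurrency × chunk size, as described in rclone's S3 backend documentation. Other S3-compatible providers may publish different limits, and the function names are illustrative.

```python
import math

MIB = 1024 * 1024
MAX_PARTS = 10_000      # Amazon S3 multipart part-count limit
MIN_PART_MIB = 5        # Amazon S3 minimum part size


def min_chunk_mib(file_bytes: int) -> int:
    """Smallest whole-MiB chunk size that fits the file into MAX_PARTS parts."""
    return max(MIN_PART_MIB, math.ceil(file_bytes / MAX_PARTS / MIB))


def upload_buffer_mib(transfers: int, upload_concurrency: int, chunk_mib: int) -> int:
    """Approximate memory used by multipart upload buffers, in MiB."""
    return transfers * upload_concurrency * chunk_mib


print(min_chunk_mib(1024**4))          # a 1 TiB object
print(upload_buffer_mib(16, 4, 64))    # 16 transfers, concurrency 4, 64M chunks
```

The second figure is the one to watch: large chunks combined with high concurrency can exhaust RAM well before the network is saturated.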

Cloudflare R2 & Backblaze B2

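- Tune the backend's chunk-size and upload-cutoff options (--s3-chunk-size and --s3-upload-cutoff for R2, which uses rclone's S3 backend; --b2-chunk-size and --b2-upload-cutoff for B2) so large files always use multipart uploads.

- Watch provider quotas; extremely high concurrency can trigger rate limiting.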

NAS or local storage

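- Enable --fast-list for huge directory scans when the other side of the job is a bucket-based remote such as S3 or B2; it trades memory for fewer listing calls, and rclone ignores it on backends without recursive listing, including local disks.

- Use a --buffer-size large enough to keep drives busy (e.g., 32M+).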

Step 4 – Stabilize long-running jobs

- Retries: Set --retries 10 and --low-level-retries 20 for flaky links.

- Backoff: Set --retries-sleep (for example, 30s) to avoid hot-loop failures on providers with temporary 429s.

- Partial uploads: If you often stop and resume jobs mid-transfer, rerun the same copy or sync job; rclone skips files that already match at the destination. The --resync flag applies only to bidirectional (bisync) jobs, where it rebuilds the sync baseline.

- Checksums: Use --checksum for critical archives to prevent silent corruption, even if it adds CPU overhead.

- Notifications: Pair jobs with Slack/email alerts so you know when performance drops.
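For readers who want to see what these settings amount to outside the GUI, the sketch below assembles the equivalent rclone flag list without running anything. The remote names `src:bucket` and `dst:bucket` and the 30s sleep are placeholders chosen for illustration.

```python
import shlex


def stability_flags(retries=10, low_level_retries=20, retries_sleep="30s", checksum=True):
    """Build the retry and integrity flags discussed above as an argument list."""
    flags = [
        "--retries", str(retries),
        "--low-level-retries", str(low_level_retries),
        "--retries-sleep", retries_sleep,
    ]
    if checksum:
        flags.append("--checksum")
    return flags


# Hypothetical remotes; substitute the ones configured in your own setup.
cmd = ["rclone", "copy", "src:bucket", "dst:bucket", *stability_flags()]
print(shlex.join(cmd))
```

Building the arguments as a list rather than a string keeps quoting safe if the command is later passed to `subprocess.run`.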

Monitoring and continuous improvement

- Tag jobs (for example, [PerfTest] S3↔R2 4TB) so it’s easy to compare iterations.

- Export Job History weekly and chart throughput over time.

- Document winning configs (chunk size, concurrency, throttling) in your runbooks.

- Share presets with teammates by cloning jobs—no more copy/paste CLI flags.

- Schedule quarterly reviews to ensure settings still match provider limits and new workloads.
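Comparing iterations can be as simple as a percent-change calculation on two runs of the same tagged job. The dictionary keys below are hypothetical and would need to be mapped from whatever your Job History export actually contains.

```python
def pct_change(old, new):
    """Percent change from old to new; None when the old value is zero."""
    if old == 0:
        return None
    return round((new - old) / old * 100, 1)


def compare_runs(before, after):
    """Compare duration and retry counts between two runs (hypothetical keys)."""
    return {
        "duration_pct": pct_change(before["seconds"], after["seconds"]),
        "retries_pct": pct_change(before["retries"], after["retries"]),
    }


before = {"seconds": 7200, "retries": 40}   # untuned run
after = {"seconds": 5400, "retries": 10}    # tuned run
print(compare_runs(before, after))
```

Negative values mean the tuned run was shorter or needed fewer retries, which is the figure worth recording in the runbook next to the winning configuration.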

FAQs

Q. Do these optimizations require editing rclone.conf manually?

A. No. Every flag mentioned above exists inside RcloneView’s job editor; the GUI writes the config for you.

Q. What if increasing transfers causes throttling?

A. Lower the values incrementally and enable --bwlimit during business hours so critical apps keep their bandwidth.
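rclone's --bwlimit accepts a timetable of space-separated `HH:MM,limit` entries, which suits the business-hours case. The sketch below only builds that string; the specific hours and limits are illustrative, and whether RcloneView's job editor accepts a timetable in its bandwidth field is an assumption to verify.

```python
def bwlimit_timetable(slots):
    """Join (start_time, limit) pairs into rclone's --bwlimit timetable format."""
    return " ".join(f"{start},{limit}" for start, limit in slots)


# Cap at 10 MiB/s from 08:00, lift the cap at 18:00.
print(bwlimit_timetable([("08:00", "10M"), ("18:00", "off")]))
```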

Q. Can I mix chunk sizes within one job?

A. Each job uses a single chunk-size configuration. Create separate jobs per dataset if different tuning is required.

Q. How do I prove improvements to leadership?

A. Export before/after Job History logs and highlight the reduced duration plus fewer retries or errors.