Automate Your Backup Routine: Schedule Daily Sync Jobs Across Clouds

Turn nightly backups into a set-and-forget workflow with RcloneView’s scheduler and visual job controls.

Why automated cloud backup converts

“Automated cloud backup” is one of the highest-intent search terms for storage tools. Teams want:

- Predictable recovery points without manual starts.

- Multi-cloud safety—copy data to S3, Wasabi, Cloudflare R2, or B2.

- Auditable history to prove compliance.

- GUI-first control so ops and non-CLI teammates can manage schedules.

RcloneView rides on the rclone engine but wraps it with Jobs, Compare, and scheduling so you can automate backups visually.


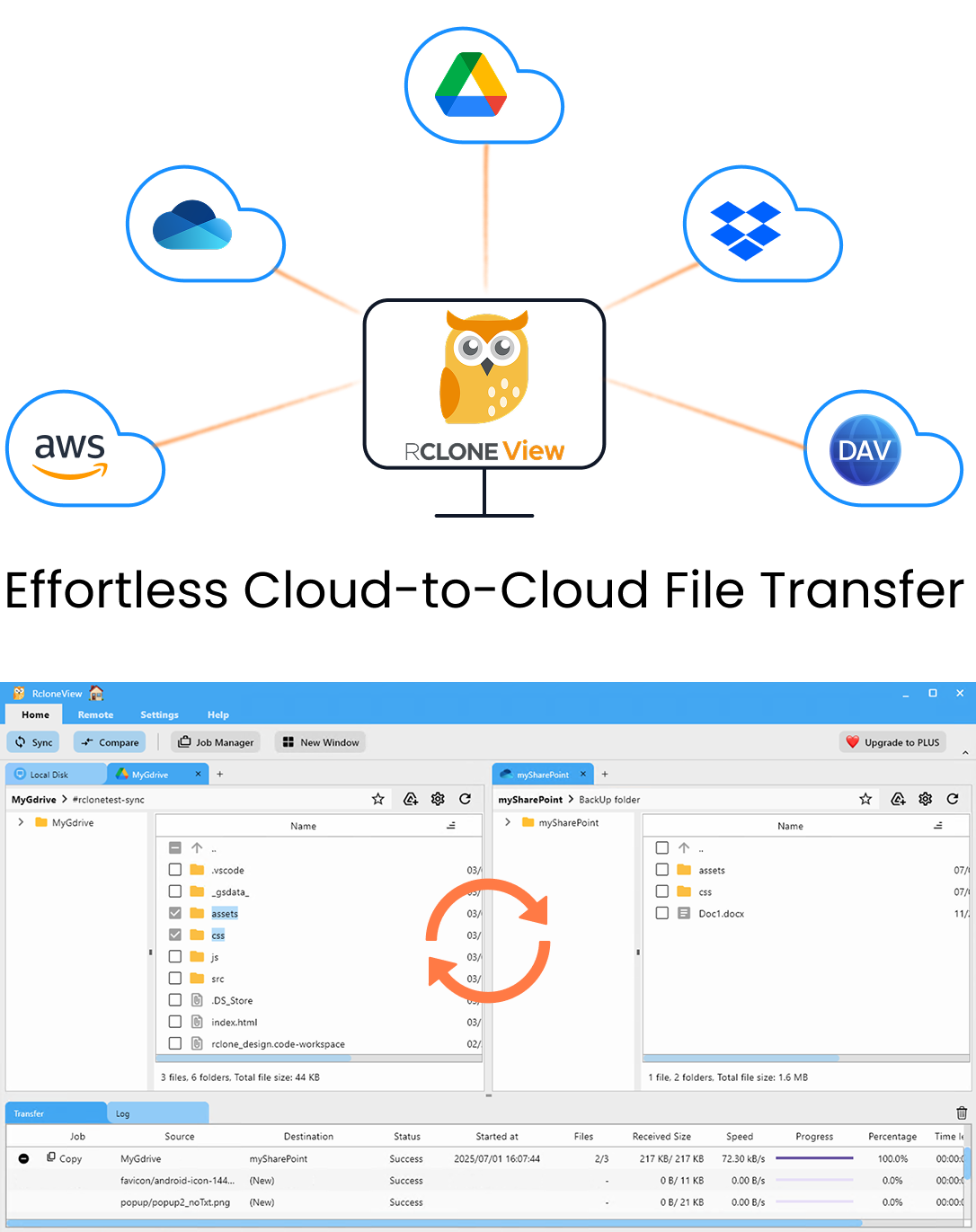


Reference setup

- Sources: NAS shares, on-prem file servers, Google Drive/OneDrive/Dropbox.

- Targets: Amazon S3/Glacier, Wasabi, Cloudflare R2, Backblaze B2, or another S3-compatible provider.

- Network: Ensure outbound HTTPS and stable bandwidth during your backup window.

- Permissions: Create least-privilege API users for each destination bucket.
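On AWS S3, "least privilege" for a sync target usually means listing one bucket and reading, writing, and deleting objects inside it. The sketch below builds such an IAM policy as a Python dict. The bucket name is hypothetical, the action list is our assumption of a minimal set for sync (rclone's S3 documentation has its own minimal-permissions example that may list extras such as ACL writes), and with a key this narrow you may also need rclone's `no_check_bucket` option so it does not try to create the bucket. Wasabi, R2, and B2 manage keys differently.

```python
import json


def bucket_policy(bucket: str) -> dict:
    """Build an IAM policy limited to one backup bucket (hypothetical helper)."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reads, writes, and deletes apply only to objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(bucket_policy("nas-main-backup"), indent=2))
```

Create one such user and policy per destination bucket so a leaked key cannot touch other buckets.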

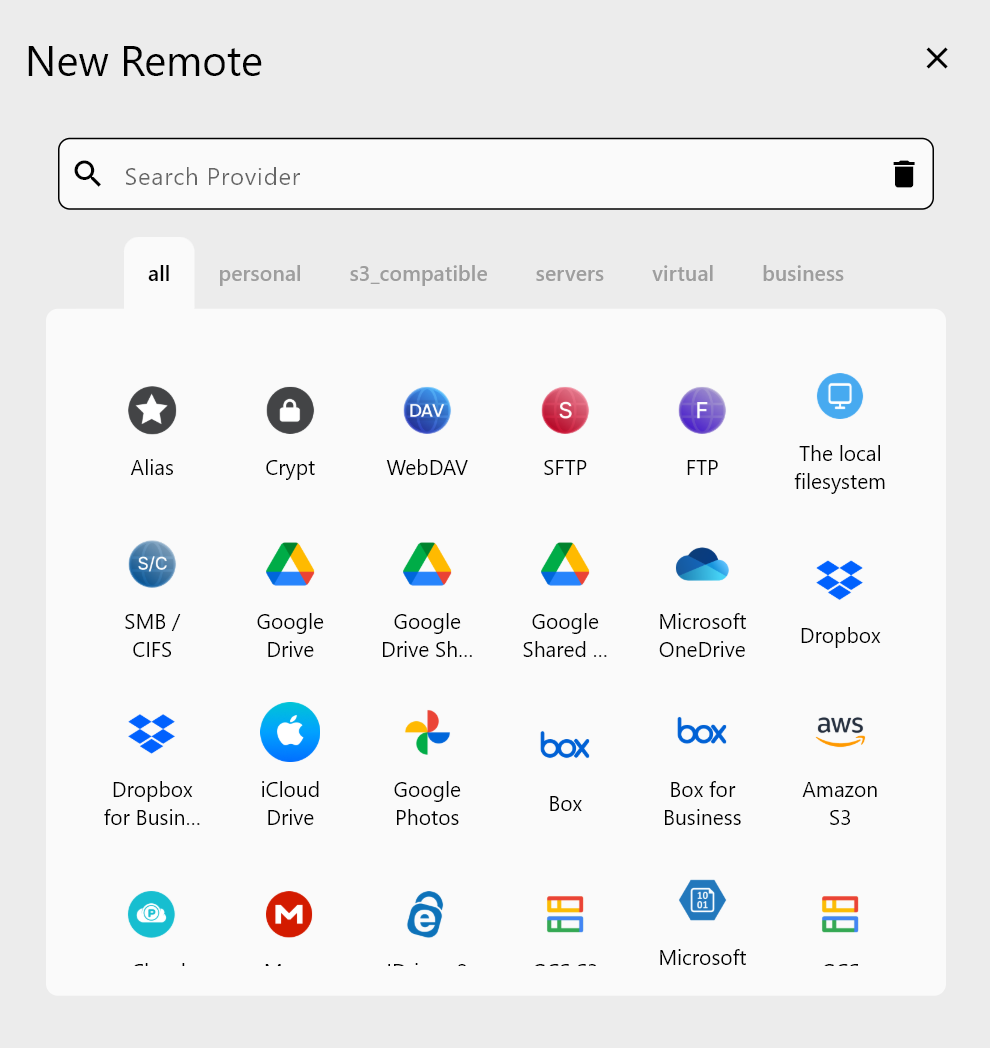

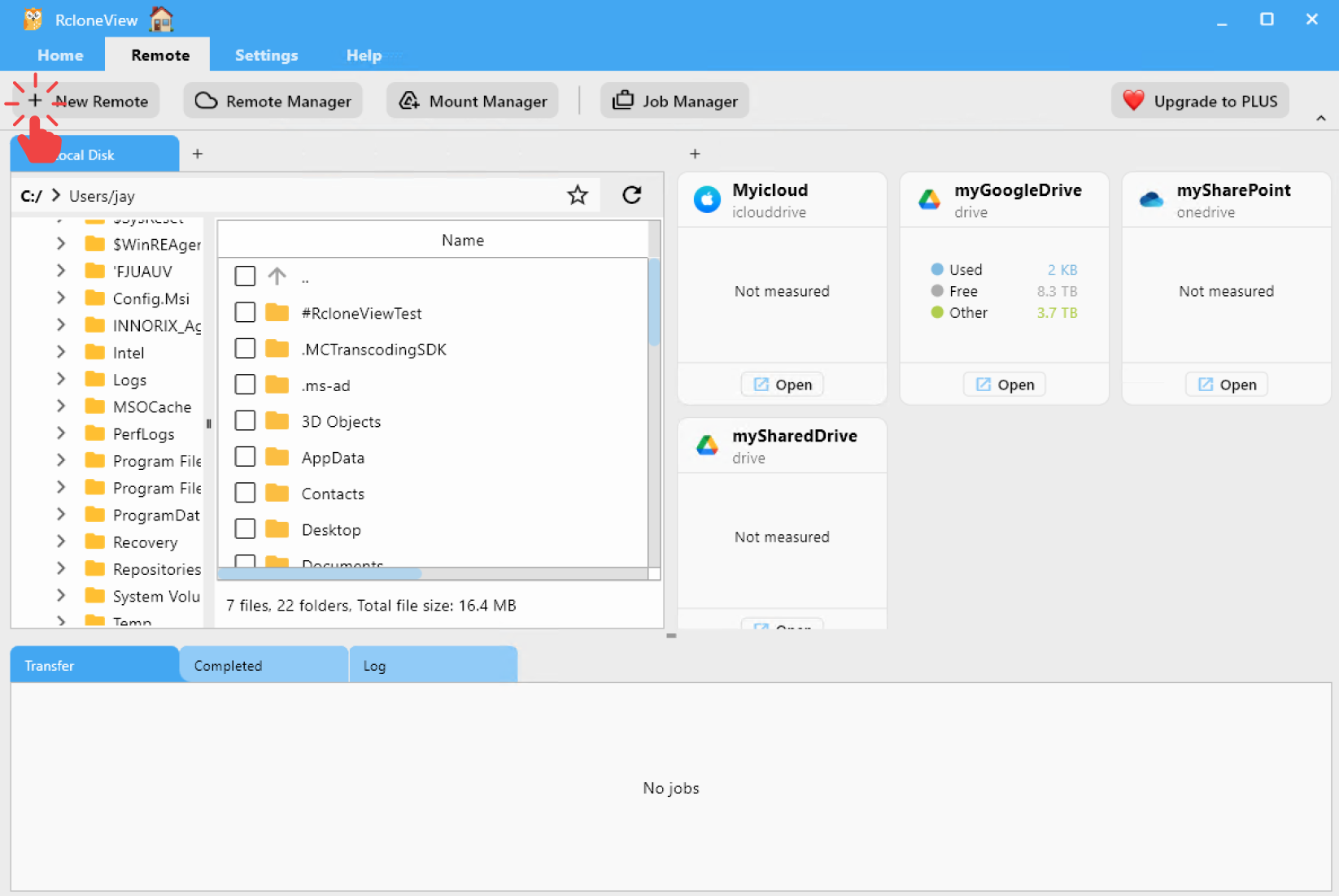

Step 1 – Add remotes in RcloneView

- Open RcloneView → + New Remote.

- Choose the backend type (S3, R2, B2, Google Drive, OneDrive, Dropbox, WebDAV/SMB for NAS).

- Name each remote clearly (`NAS_Main`, `S3_Backup`, `R2_Secondary`).

- Confirm connectivity in the Explorer pane.
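For reference, two of the remotes named above would look roughly like this as rclone config sections, the INI format rclone itself reads. We assume, without having checked, that RcloneView's + New Remote dialog produces equivalent entries. All keys, the region, and the R2 account ID are placeholders; this sketch only renders text and does not touch your real config.

```python
import configparser
import io

# Placeholder credentials: replace with your own keys, region, and R2 account ID.
REMOTES = {
    "S3_Backup": {
        "type": "s3",
        "provider": "AWS",
        "access_key_id": "YOUR_ACCESS_KEY",
        "secret_access_key": "YOUR_SECRET_KEY",
        "region": "us-east-1",
    },
    "R2_Secondary": {
        "type": "s3",
        "provider": "Cloudflare",
        "access_key_id": "YOUR_R2_KEY",
        "secret_access_key": "YOUR_R2_SECRET",
        "endpoint": "https://ACCOUNT_ID.r2.cloudflarestorage.com",
    },
}


def render_rclone_conf(remotes: dict) -> str:
    """Render remotes as INI text in the section/key layout rclone.conf uses."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep key names exactly as written
    for name, options in remotes.items():
        parser[name] = options
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(render_rclone_conf(REMOTES))
```

Both R2 and AWS use rclone's `s3` backend; only `provider` and the endpoint differ.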


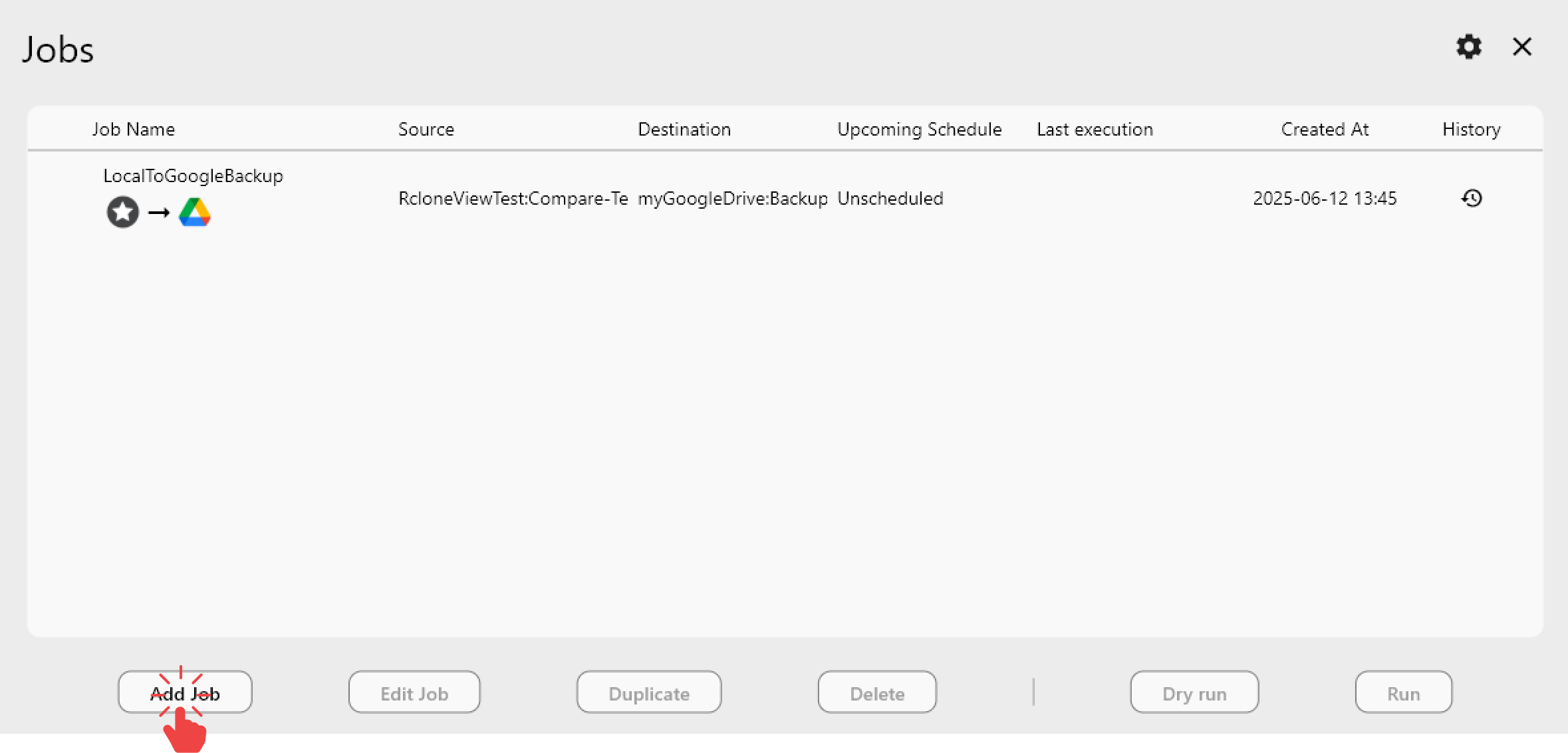

Step 2 – Create a daily backup job

- On the main screen, go to Home → Job Manager → Add Job.

- Pick your source and destination, then choose Sync to keep a mirrored copy.

- Run a Dry Run to preview what will change before the first real execution.

- Save the job with a descriptive name: `[Daily] NAS→S3 Backup`.
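The Sync-plus-Dry-Run step above corresponds to rclone's `sync` command with `--dry-run`: sync makes the destination match the source (including deletions), and `--dry-run` only reports what would change. This sketch prints that command for a hypothetical pair of remote paths; it does not claim to be what RcloneView runs internally.

```python
from __future__ import annotations

import shlex


def build_sync_cmd(source: str, dest: str, dry_run: bool = True) -> list[str]:
    """Build an rclone sync command; --dry-run reports changes without making them."""
    cmd = ["rclone", "sync", source, dest]
    if dry_run:
        cmd.append("--dry-run")
    return cmd


if __name__ == "__main__":
    # Hypothetical remote paths; paste the printed line into a terminal to preview.
    print(shlex.join(build_sync_cmd("NAS_Main:share", "S3_Backup:nas-main-backup")))
```

Defaulting `dry_run` to `True` means the real run has to be requested explicitly, which mirrors the "preview first" habit.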

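Tip: For versioned backups, set `--backup-dir` to a dated path (e.g., `/backups/{date}`) so files a sync would overwrite or delete are moved there instead of being lost. rclone requires this directory to be on the same remote as the destination and not to overlap the synced destination path.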
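We don't know whether RcloneView expands a `{date}` placeholder itself, so this sketch computes the dated path directly and checks rclone's two documented constraints before you use it: same remote as the destination, and no overlap with the synced path. The remote and folder names are hypothetical, and the helper only understands `remote:path` strings.

```python
from __future__ import annotations

import datetime as dt


def split_remote(path: str) -> tuple[str, str]:
    """Split 'remote:some/path' into ('remote', 'some/path')."""
    remote, _, rest = path.partition(":")
    return remote, rest.strip("/")


def _contains(outer: str, inner: str) -> bool:
    """True if inner equals outer or lives underneath it ('' means the remote root)."""
    return outer == "" or inner == outer or inner.startswith(outer + "/")


def dated_backup_dir(dest: str, backup_root: str, day: dt.date) -> str:
    """Return '<backup_root>/<YYYY-MM-DD>' after checking rclone's --backup-dir rules."""
    dest_remote, dest_path = split_remote(dest)
    root_remote, root_path = split_remote(backup_root)
    if dest_remote != root_remote:
        raise ValueError("--backup-dir must be on the same remote as the destination")
    backup_path = f"{root_path}/{day.isoformat()}".strip("/")
    if _contains(dest_path, backup_path) or _contains(backup_path, dest_path):
        raise ValueError("--backup-dir must not overlap the sync destination")
    return f"{root_remote}:{backup_path}"


if __name__ == "__main__":
    print(dated_backup_dir("S3_Backup:nas-backup/current",
                           "S3_Backup:nas-backup/versions", dt.date.today()))
```

Keeping `current/` and `versions/` as sibling folders in the same bucket is the simplest layout that satisfies both rules. rclone does allow overlap when the backup folder is excluded by a filter, which this sketch does not model.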


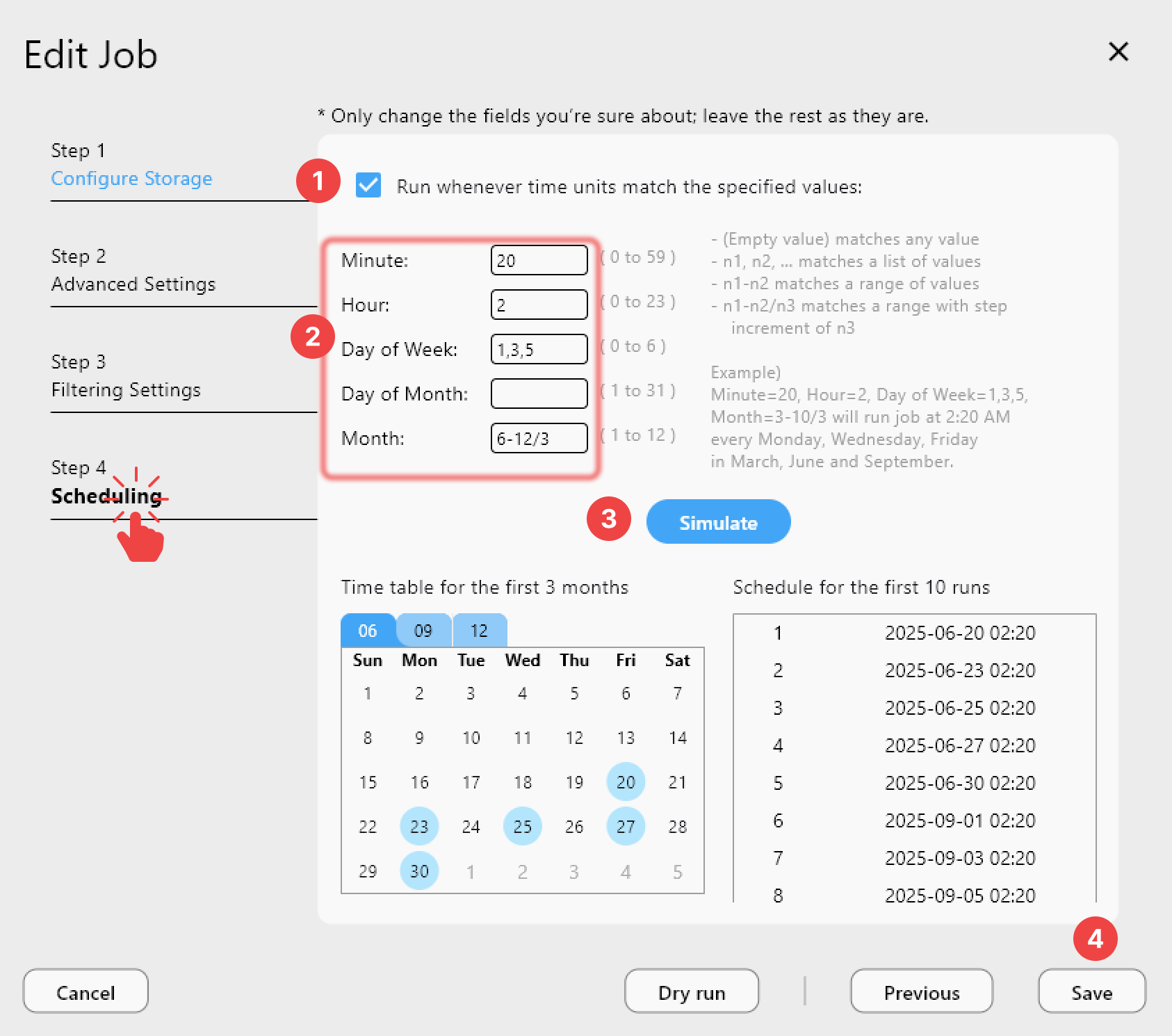

Step 3 – Schedule and throttle

- Open the job → Scheduling. Select Minute, Hour, Day of Week, Day of Month, and Month to set your cadence.

- Click Simulate to preview the next run times and confirm the pattern.

- Adjust bandwidth limits for business hours, then remove caps overnight.

- Configure notifications (email/Slack) for success, warnings, or failures.

- Set retry and backoff options for unreliable links.
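A Simulate-style preview can be approximated by expanding a cron expression into its next few run times. This is our own minimal sketch, not RcloneView's implementation. It uses standard crontab field order (minute, hour, day of month, month, day of week, with 0 = Sunday), which we assume maps onto the Minute/Hour/Day/Month selectors. It supports `*`, single values, ranges, steps, and comma lists, and follows classic cron's rule that when both day fields are restricted, either may match.

```python
from __future__ import annotations

import datetime as dt


def parse_field(spec: str, lo: int, hi: int) -> set[int]:
    """Expand one cron field ('*', '*/n', 'a', 'a-b', 'a-b/n', 'a/n', comma lists)."""
    values: set[int] = set()
    for part in spec.split(","):
        rng, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if rng == "*":
            start, end = lo, hi
        elif "-" in rng:
            first, last = rng.split("-")
            start, end = int(first), int(last)
        else:
            start = int(rng)
            end = hi if step_text else start  # 'a/n' means every n from a upward
        values.update(range(start, end + 1, step))
    return values


def next_runs(expr: str, after: dt.datetime, count: int = 5) -> list[dt.datetime]:
    """List the next `count` minutes that match a 5-field cron expression."""
    minute_s, hour_s, dom_s, month_s, dow_s = expr.split()
    minutes = parse_field(minute_s, 0, 59)
    hours = parse_field(hour_s, 0, 23)
    doms = parse_field(dom_s, 1, 31)
    months = parse_field(month_s, 1, 12)
    dows = parse_field(dow_s, 0, 6)  # 0 = Sunday, as in crontab
    # Classic cron: if both day fields are restricted, either one may match.
    either_day = not dom_s.startswith("*") and not dow_s.startswith("*")

    runs: list[dt.datetime] = []
    t = after.replace(second=0, microsecond=0) + dt.timedelta(minutes=1)
    limit = t + dt.timedelta(days=4 * 366)  # stop on impossible dates such as Feb 30
    while len(runs) < count and t < limit:
        cron_dow = (t.weekday() + 1) % 7  # Python: Monday = 0; cron: Sunday = 0
        dom_ok, dow_ok = t.day in doms, cron_dow in dows
        day_ok = (dom_ok or dow_ok) if either_day else (dom_ok and dow_ok)
        if t.minute in minutes and t.hour in hours and t.month in months and day_ok:
            runs.append(t)
        t += dt.timedelta(minutes=1)
    return runs


if __name__ == "__main__":
    for run in next_runs("0 1 * * *", dt.datetime.now(), count=3):
        print(run.isoformat(sep=" "))
```

Scanning minute by minute is slow for rare schedules but simple enough to trust, which is what a preview needs.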

👉 Learn more: Job Scheduling and Execution
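The throttling and retry items in Step 3 correspond to real rclone flags: `--bwlimit` accepts a timetable of `HH:MM,rate` entries (the last entry stays in force until the first one the next day), and `--retries` / `--retries-sleep` rerun a failed sync with a fixed pause between attempts (a fixed interval, not exponential backoff). Whether RcloneView exposes these as settings or as custom flags is an assumption on our part; the helper name and defaults below are hypothetical.

```python
from __future__ import annotations


def throttle_args(business_start: str, business_end: str, daytime_cap: str,
                  retries: int = 5, retries_sleep: str = "30s") -> list[str]:
    """Cap bandwidth during business hours, lift it overnight, and retry failed runs."""
    # Each timetable entry applies from its time until the next entry;
    # "off" removes the limit. Rates are bytes/s, e.g. "10M" = 10 MiB/s.
    timetable = f"{business_start},{daytime_cap} {business_end},off"
    return [
        "--bwlimit", timetable,
        "--retries", str(retries),         # rerun the whole sync after a failure
        "--retries-sleep", retries_sleep,  # fixed pause between those reruns
    ]


if __name__ == "__main__":
    print(" ".join(throttle_args("08:00", "19:00", "10M")))
```

For example, `throttle_args("08:00", "19:00", "10M")` yields a 10 MiB/s cap from 08:00 and no cap from 19:00 until 08:00 the next morning.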

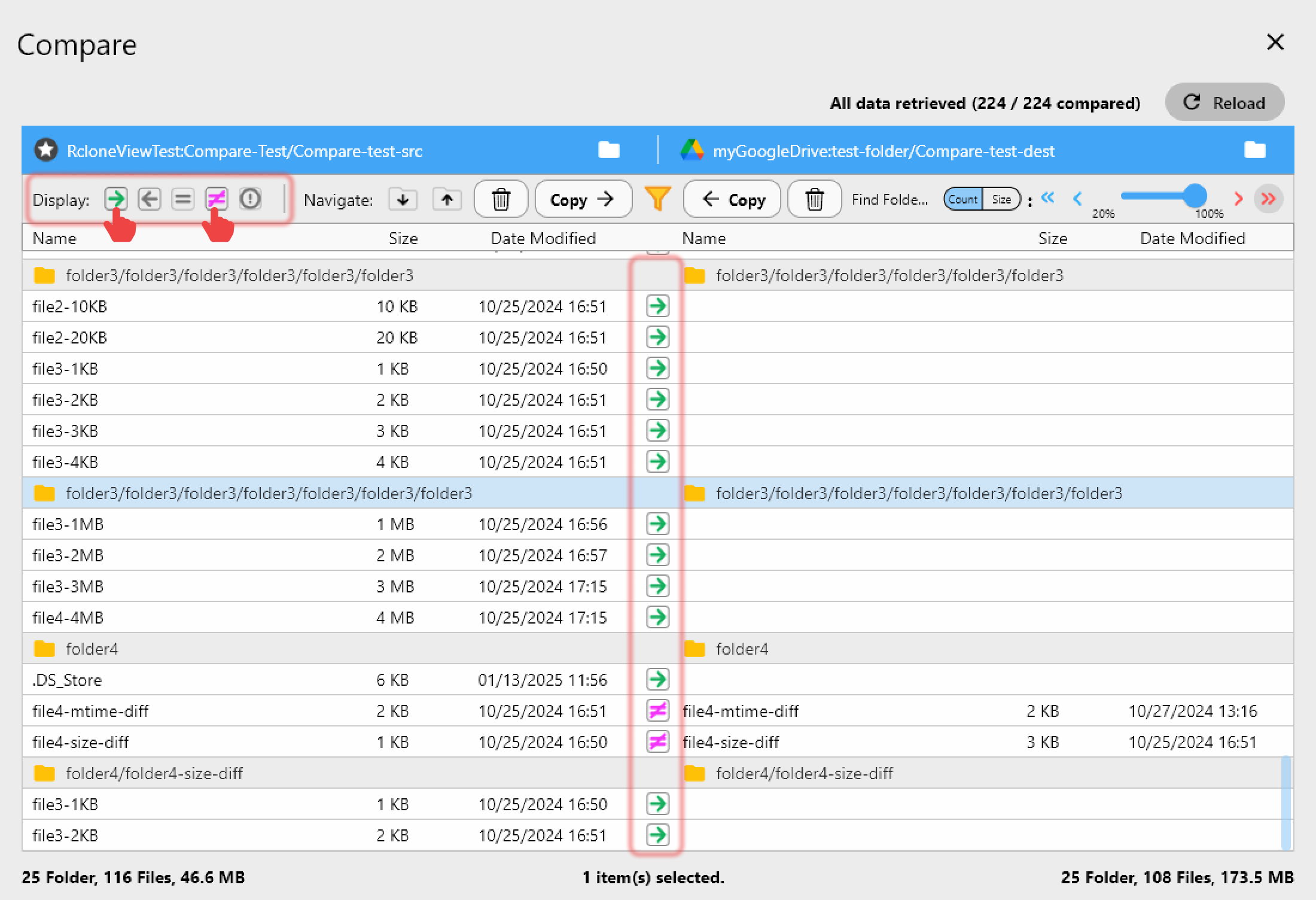

Step 4 – Monitor and audit

- Job History: Track duration, throughput, and errors.

- Compare: Run periodic compares to confirm parity between source and backup.

- Logs: Export logs weekly for compliance (RPO/RTO evidence).

- Health checks: Quarterly restore tests to a staging bucket or NAS.
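If you also run rclone's own `check` command as a parity test (for example `rclone check NAS_Main:share S3_Backup:nas-main-backup --combined report.txt`, with hypothetical paths), its combined report prefixes each path with a status marker. The marker meanings below follow rclone's check documentation; the summarizing helpers are our own sketch and are unrelated to how RcloneView's Compare view works internally.

```python
from __future__ import annotations

from collections import Counter

# Line markers written by `rclone check --combined`, per rclone's docs.
MARKERS = {
    "=": "identical",          # on both sides and matching
    "+": "missing_on_dest",    # only in the source
    "-": "missing_on_source",  # only in the destination
    "*": "differs",            # on both sides but different
    "!": "error",              # could not be read or hashed
}


def summarize_combined(report: str) -> Counter:
    """Count each status in a combined report whose lines look like '<marker> <path>'."""
    counts: Counter = Counter()
    for line in report.splitlines():
        if line.strip():
            counts[MARKERS.get(line[0], "unknown")] += 1
    return counts


def in_parity(counts: Counter) -> bool:
    """For a mirrored Sync job, parity means every file was reported identical."""
    return all(n == 0 for status, n in counts.items() if status != "identical")


if __name__ == "__main__":
    sample = "= docs/a.txt\n= docs/b.txt\n+ photos/new.jpg\n* db/dump.sql\n"
    counts = summarize_combined(sample)
    print(dict(counts), "parity" if in_parity(counts) else "mismatch")
```

Archiving the counts alongside the exported logs gives a compact weekly parity record.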

👉 Learn more: Compare Folder Contents

Pro tips for rock-solid schedules

- Stagger multiple jobs to avoid API throttling (e.g., `[Daily] NAS→S3` at 1am, `[Daily] S3→R2` at 3am).

- Use `--checksum` for critical archives; prefer `--size-only` for speed-sensitive runs.

- Use include/exclude filters to skip noisy directories, or `--max-age` to limit a run to recently modified files.

- Clone a proven job as a template for new teams or regions so settings stay consistent.

- Label jobs by tier: `[Primary Backup]`, `[Offsite Copy]`, `[Archive Glacier]`.
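The staggering tip can be planned mechanically: give each job its own daily start hour, a fixed gap apart. This hypothetical helper emits standard 5-field cron expressions and refuses a plan whose start times would wrap around and collide within one day.

```python
from __future__ import annotations


def stagger(jobs: list[str], first_hour: int = 1, gap_hours: int = 2) -> dict[str, str]:
    """Assign each job a daily start time gap_hours apart, as a 5-field cron expression."""
    if len(jobs) * gap_hours > 24:
        raise ValueError("too many jobs for one day at this gap; start times would collide")
    schedule = {}
    for i, job in enumerate(jobs):
        hour = (first_hour + i * gap_hours) % 24  # wrap past midnight
        schedule[job] = f"0 {hour} * * *"  # minute hour day-of-month month day-of-week
    return schedule
```

With the job names from the tip, `stagger(["[Daily] NAS→S3", "[Daily] S3→R2"])` assigns `0 1 * * *` and `0 3 * * *`, i.e. 1am and 3am. A fixed gap only helps if each job reliably finishes within it, so check durations in Job History before tightening it.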

FAQs

Q. Does scheduling require the app to stay open?

A. Scheduled jobs are run by RcloneView’s background service; keep that service running, or deploy it on a small VM or NAS that stays online.

Q. Can I automate multi-hop backups (e.g., NAS→S3→R2)?

A. Yes. Chain two jobs with different schedules, and make sure the second job starts only after the first job’s backup window has ended.
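One way to decide when the second hop may start is to take the first job's longest observed run time (for example from its Job History) and add a safety buffer. This hypothetical check does exactly that; the durations and the 30-minute default buffer are illustrative assumptions.

```python
from __future__ import annotations

import datetime as dt


def chain_is_safe(first_start: dt.time, durations_min: list[float],
                  second_start: dt.time, buffer_min: float = 30) -> bool:
    """True if the second job starts after the first job's longest run plus a buffer."""
    anchor = dt.date(2000, 1, 1)  # arbitrary date so the times can be subtracted
    start1 = dt.datetime.combine(anchor, first_start)
    start2 = dt.datetime.combine(anchor, second_start)
    if start2 <= start1:
        start2 += dt.timedelta(days=1)  # second job runs after midnight
    worst_end = start1 + dt.timedelta(minutes=max(durations_min) + buffer_min)
    return start2 >= worst_end


if __name__ == "__main__":
    # Hypothetical durations (minutes) from the first hop's history.
    print(chain_is_safe(dt.time(1, 0), [70, 95, 80], dt.time(3, 0)))  # False
```

Here the slowest run (95 minutes) plus the buffer ends at 03:05, so a 3am start for the second hop is too early; 4am would pass.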

Q. What about deletion safety?

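A. Start with `--backup-dir` (which keeps overwritten or deleted files) or a `--max-delete` threshold (which stops the sync once the number of deletions exceeds the limit) until you’re confident in the sync pattern.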
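A `--max-delete` value is easier to choose when it scales with the size of the backup. The sketch below applies a hypothetical policy of allowing at most 5% of the destination's files to be deleted per run (never less than one) and optionally adds a `--backup-dir`. The 5% figure and the helper are illustrative assumptions, not an rclone or RcloneView default.

```python
from __future__ import annotations

import math


def safety_args(dest_file_count: int, max_delete_fraction: float = 0.05,
                backup_dir: str | None = None) -> list[str]:
    """Cap deletions at a fraction of the destination's files; optionally keep deleted files."""
    limit = max(1, math.floor(dest_file_count * max_delete_fraction))
    args = ["--max-delete", str(limit)]
    if backup_dir:
        args += ["--backup-dir", backup_dir]  # overwritten/deleted files move here
    return args


if __name__ == "__main__":
    print(safety_args(12000))
```

A source folder that is accidentally emptied then trips the limit and stops the run instead of wiping the backup.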

Q. How do I prove backups happened?

A. Export Job History weekly and archive it with your compliance reports.
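An exported history is stronger evidence when you also check it against your recovery point objective. This sketch assumes a hypothetical CSV export with `finished_at` (ISO timestamp) and `status` columns; RcloneView's actual export format may differ. It reports every pair of consecutive successful runs that are further apart than the allowed gap.

```python
from __future__ import annotations

import csv
import datetime as dt
import io


def rpo_gaps(history_csv: str, max_gap: dt.timedelta) -> list[tuple[dt.datetime, dt.datetime]]:
    """Return pairs of consecutive successful runs that are more than max_gap apart."""
    rows = csv.DictReader(io.StringIO(history_csv))
    finished = sorted(
        dt.datetime.fromisoformat(row["finished_at"])
        for row in rows
        if row["status"] == "success"  # failed runs do not count as recovery points
    )
    return [(a, b) for a, b in zip(finished, finished[1:]) if b - a > max_gap]


if __name__ == "__main__":
    sample = (
        "finished_at,status\n"
        "2024-05-01T01:42:00,success\n"
        "2024-05-02T01:39:00,failed\n"
        "2024-05-03T01:45:00,success\n"
    )
    for start, end in rpo_gaps(sample, dt.timedelta(hours=25)):
        print(f"RPO gap: {start} -> {end}")
```

For a daily job, a 25-hour threshold tolerates small start-time drift while still flagging any day with no successful run.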